DeepSeek Debrief: >128 Days Later

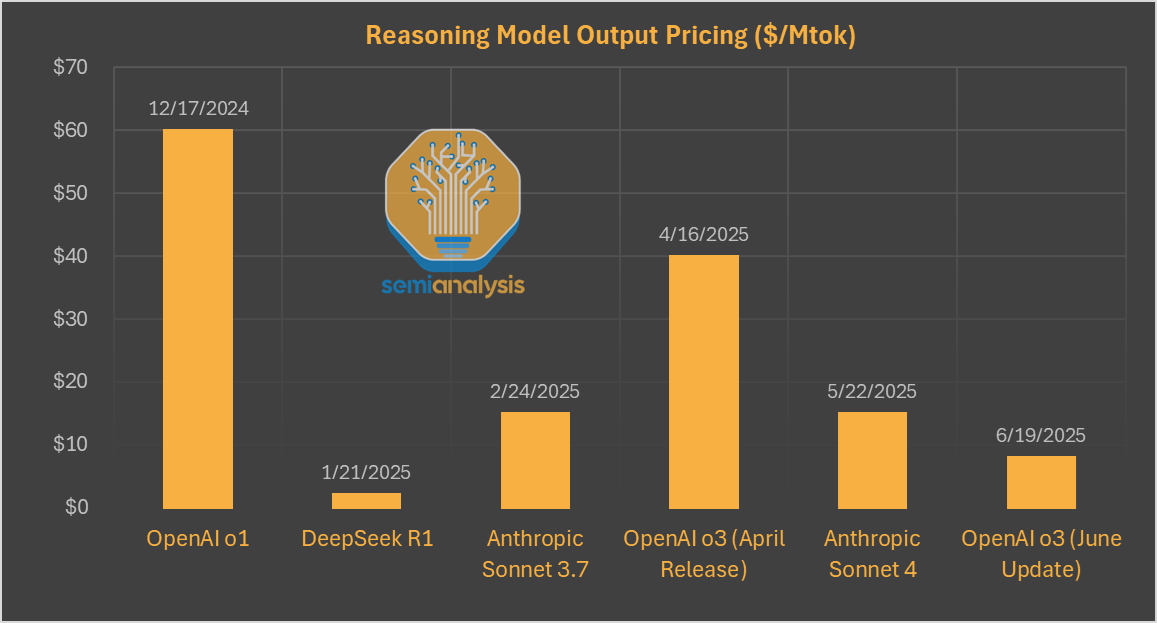

It’s been a bit over 150 days since the launch of the Chinese LLM DeepSeek R1 shook stock markets and the Western AI world. R1 was the first publicly released model to match OpenAI’s reasoning behavior. However, much of this was overshadowed by the fear that DeepSeek (and China) would commoditize AI models, given R1’s extremely low price of $0.55 per million input tokens and $2.19 per million output tokens, which undercut the then-SOTA model o1 by 90%+ on output token pricing. Reasoning model prices have dropped significantly since, with OpenAI recently cutting its flagship model price by 80%.
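As a rough check on the 90%+ figure, the discount can be worked out from the two output prices. This is a minimal sketch: the R1 price comes from the text above, while the o1 price of $60 per million output tokens is an assumption based on its list price at the time and is not stated in this article. The function name `discount` is purely illustrative.

```python
# Prices in USD per million output tokens.
R1_OUTPUT = 2.19   # stated in the article
O1_OUTPUT = 60.00  # assumption: o1 list price at the time, not given in the article


def discount(cheaper: float, reference: float) -> float:
    """Return the fraction by which `cheaper` undercuts `reference`."""
    return 1 - cheaper / reference


if __name__ == "__main__":
    # Under the assumed o1 price, this prints a discount well above 90%.
    print(f"R1 undercuts o1 on output tokens by {discount(R1_OUTPUT, O1_OUTPUT):.1%}")
```

Under that assumed reference price, the result is comfortably above the 90% threshold the article cites.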

R1 got an update as DeepSeek continued to scale RL after release. This resulted in the model improving in many domains, particularly coding. This continuous development and improvement is a hallmark of the new paradigm we previously covered.

Today we look at DeepSeek’s impact on the AI model race and the state of AI market share.

A Boom and... Bust?

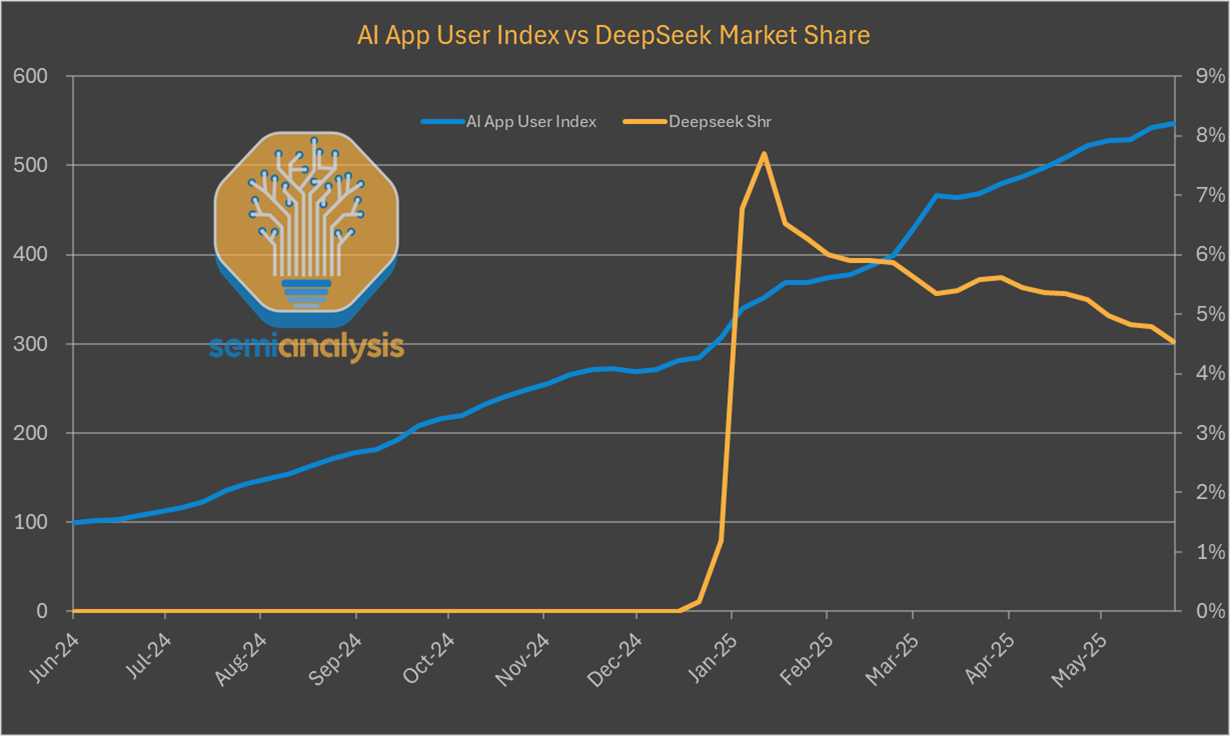

Consumer app traffic to DeepSeek spiked following release, resulting in a sharp increase in market share. Because Chinese usage is poorly tracked and Western labs are blocked in China, the numbers below understate DeepSeek’s total reach. However, that explosive growth has not kept pace with other AI apps, and DeepSeek’s market share has since declined.

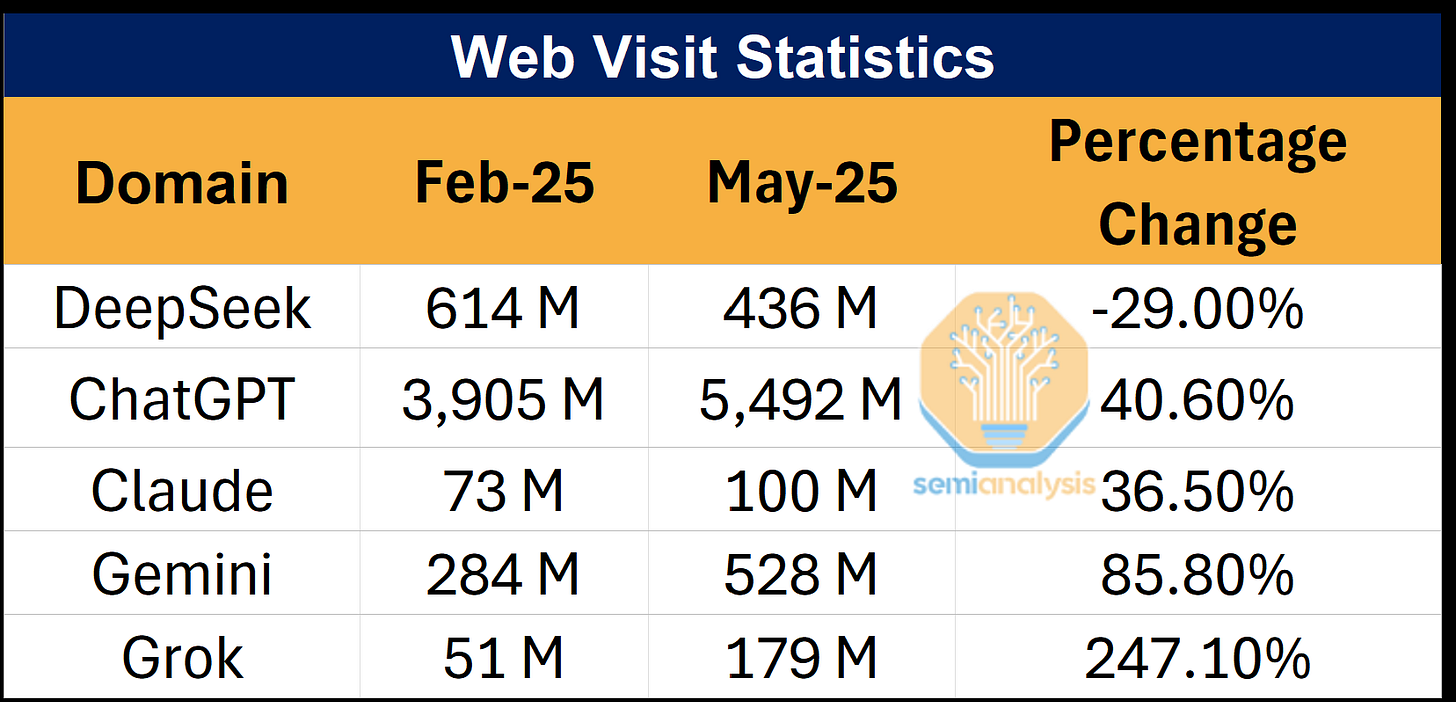

For web browser traffic, the data is even grimmer: DeepSeek traffic is down in absolute terms since release. The other leading AI model providers have all seen impressive user growth over the same time frame.

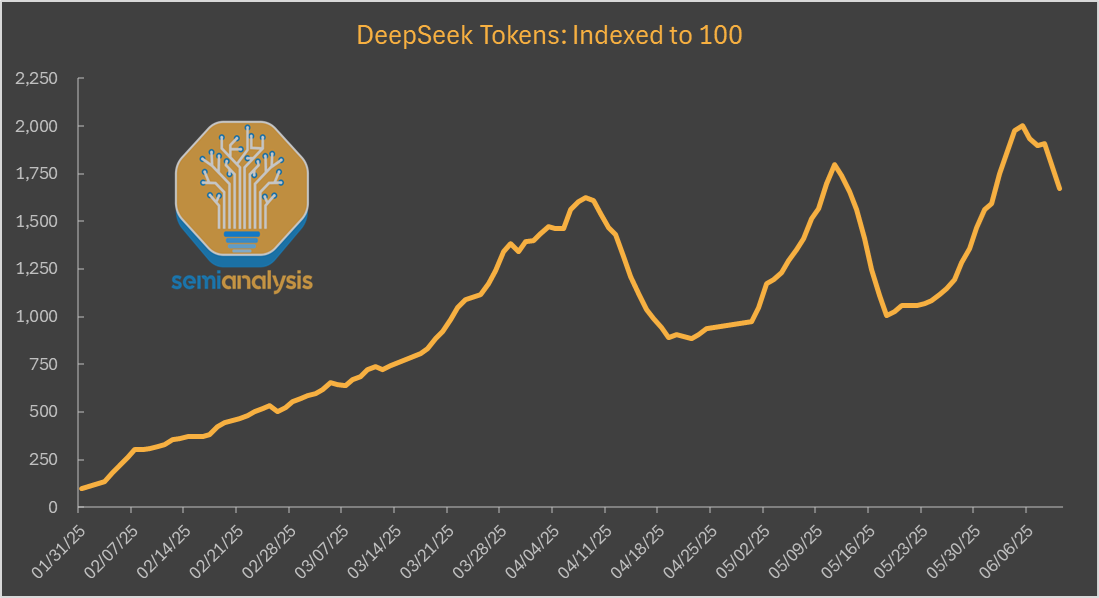

The poor user momentum for DeepSeek-hosted models stands in sharp contrast to third-party-hosted instances of DeepSeek. Aggregate usage of R1 and V3 on third-party hosts continues to grow rapidly, up nearly 20x since R1 was first released.

Digging deeper into the data and isolating the DeepSeek model tokens that are served by the company itself, we can see that DeepSeek’s own share of total tokens continues to fall every month.

So why are users shifting away from DeepSeek’s own web app and API service in favor of other open source providers, despite the rising popularity of DeepSeek’s models and their apparently very cheap price?

The answer lies in tokenomics and the myriad of

...This excerpt is provided for preview purposes. Full article content is available on the original publication.