How Meta Built a New AI-Powered Ads Model for 5% Better Conversions

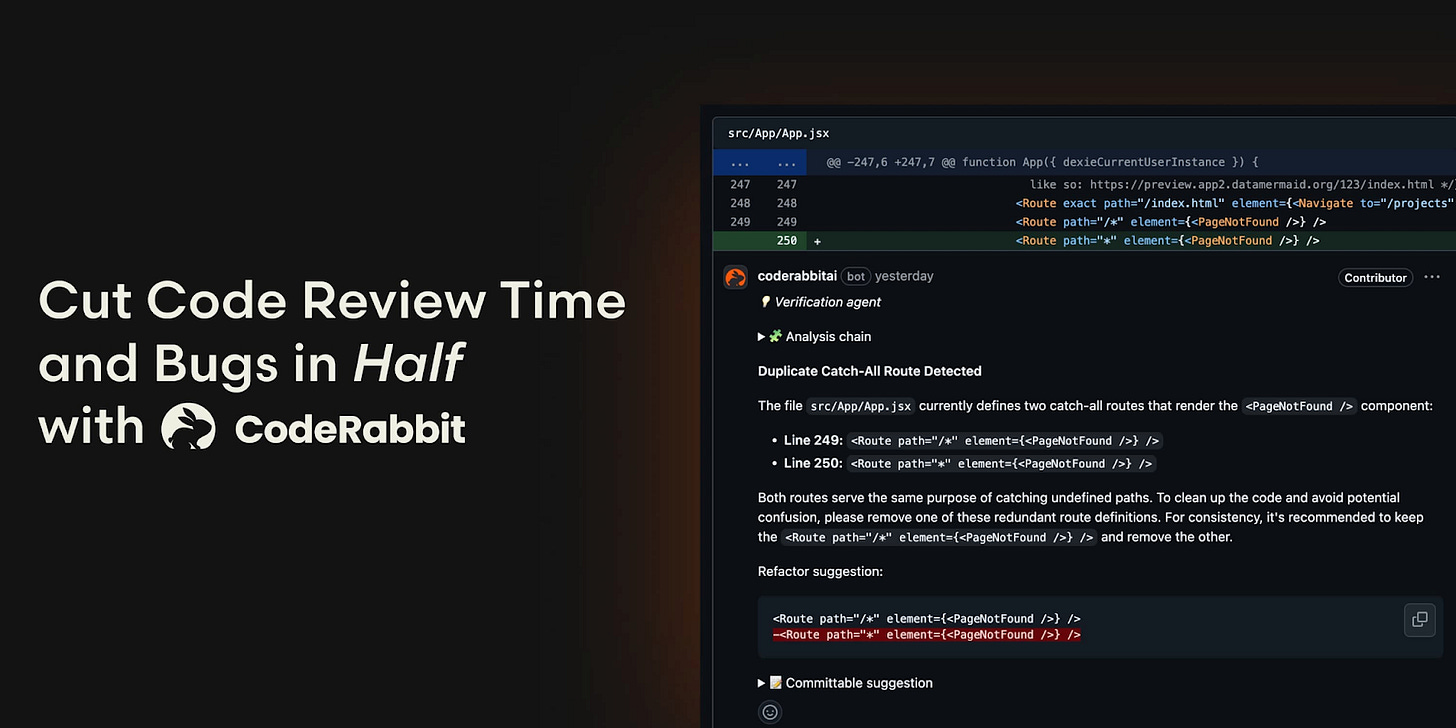

Disclaimer: The details in this post are derived from information shared online by the Meta Engineering Team. All credit for the technical details goes to the Meta Engineering Team. Links to the original articles and sources are in the references section at the end of the post. We’ve attempted to analyze the details and provide our input on them. If you find any inaccuracies or omissions, please leave a comment, and we will do our best to fix them.

When Meta announced in Q2 2025 that its new Generative Ads Model (GEM) had driven a 5% increase in ad conversions on Instagram and a 3% increase on Facebook Feed, the numbers might have seemed modest.

However, at Meta’s scale, these percentages translate to billions of dollars in additional revenue and represent a fundamental shift in how AI-powered advertising works.

Meta describes GEM as the largest foundation model ever built for recommendation systems, trained at a scale typically reserved for large language models such as GPT-4 or Claude. Yet here’s the paradox: GEM is so large and computationally intensive that Meta can’t use it directly to serve ads to users.

Instead, the company developed a teacher-student architecture that lets smaller, faster models benefit from GEM’s intelligence without inheriting its computational cost.
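This excerpt does not describe exactly how GEM’s knowledge reaches the smaller models. As a rough illustration of the general teacher-student idea (knowledge distillation), here is a minimal Python sketch, assuming a binary conversion-prediction task. A small logistic-regression “student” is trained with SGD on a blend of the observed 0/1 label and the teacher’s temperature-softened probability. The `teacher` function, the feature grid, and the `alpha`/`temperature`/`lr` values are hypothetical stand-ins, not Meta’s setup.

```python
import math
from typing import Callable, List, Sequence, Tuple


def sigmoid(z: float) -> float:
    """Logistic function mapping a logit to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def bce(p: float, target: float, eps: float = 1e-12) -> float:
    """Binary cross-entropy of probability p against a hard or soft target in [0, 1]."""
    p = min(max(p, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def distillation_loss(student_logit: float, teacher_logit: float, label: float,
                      alpha: float = 0.5, temperature: float = 2.0) -> float:
    """Blend of the usual label loss and a loss against the teacher's softened prediction."""
    hard = bce(sigmoid(student_logit), label)                 # observed conversion (0/1)
    soft_target = sigmoid(teacher_logit / temperature)        # teacher's "soft label"
    soft = bce(sigmoid(student_logit / temperature), soft_target)
    # The common temperature**2 factor keeps the soft-loss gradient on a comparable scale.
    return alpha * hard + (1.0 - alpha) * temperature ** 2 * soft


Example = Tuple[Sequence[float], float]  # (features, observed 0/1 label)


def train_student(examples: List[Example], teacher_fn: Callable[[Sequence[float]], float],
                  alpha: float = 0.0, temperature: float = 2.0,
                  lr: float = 0.5, epochs: int = 500) -> Tuple[List[float], float]:
    """Fit a small logistic-regression student with per-example SGD on distillation_loss."""
    w = [0.0] * len(examples[0][0])
    b = 0.0
    for _ in range(epochs):
        for x, y in examples:
            z = sum(wi * xi for wi, xi in zip(w, x)) + b
            t = sigmoid(teacher_fn(x) / temperature)  # teacher is only queried at training time
            # Gradient of distillation_loss with respect to the student logit z.
            grad_z = (alpha * (sigmoid(z) - y)
                      + (1.0 - alpha) * temperature * (sigmoid(z / temperature) - t))
            w = [wi - lr * grad_z * xi for wi, xi in zip(w, x)]
            b -= lr * grad_z
    return w, b


# Hypothetical stand-in for an expensive teacher: a fixed scoring function.
def teacher(x: Sequence[float]) -> float:
    return 2.0 * x[0] - 1.0 * x[1]


grid = [(x0, x1) for x0 in (-1.0, 0.0, 1.0) for x1 in (-1.0, 0.0, 1.0)]
data = [(x, 1.0 if teacher(x) > 0 else 0.0) for x in grid]
w, b = train_student(data, teacher)
# The student's weights should end up close to the teacher's (2, -1), with bias near 0.
print([round(v, 2) for v in w], round(b, 2))
```

Setting `alpha=0` trains purely on the teacher’s soft predictions; raising it mixes the real labels back in. The property that matters for serving is that the teacher is only evaluated during training, while the cheap student is the model that would answer live requests.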

In this article, we look at how the Meta engineering team built GEM and the challenges they overcame.

This excerpt is provided for preview purposes. Full article content is available on the original publication.