Qualcomm's Hexagon AI Accelerators


This is a part-free, part-paid post. The first part, discussing Qualcomm’s recently announced AI200 and AI250 AI accelerators and the history of the Hexagon architecture, is free, whilst the detailed look at the Hexagon architecture that follows is paid. None of what follows is investment advice.


“Qualcomm’s Hexagon might just be the most important architecture that no-one is talking about”

On 28 Oct 2025 Qualcomm announced two new data-centre AI inference servers, the AI200 and AI250. According to the press release:

Qualcomm AI200 and AI250 solutions deliver rack-scale performance and superior memory capacity for fast data center generative AI inference at industry-leading total cost of ownership (TCO). Qualcomm AI250 introduces an innovative memory architecture, offering a generational leap in effective memory bandwidth and efficiency for AI workloads.

Qualcomm also announced a partnership with Saudi Arabia’s HUMAIN to deploy its new data-centre technology:

Under the program, HUMAIN is targeting 200 megawatts starting in 2026 of Qualcomm AI200 and AI250 rack solutions to deliver high-performance AI inference services in the Kingdom of Saudi Arabia and globally.

The AI200 won’t be available until the end of 2026 and the AI250 until 2027 - an age in terms of the AI server market - and many are sceptical about Qualcomm’s chances of success in a market with so many competitors. Qualcomm already has AI server products in the shape of the AI100 series, first announced back in 2019, and its lower-cost cousin, the AI80 series, neither of which has made much of an impression.

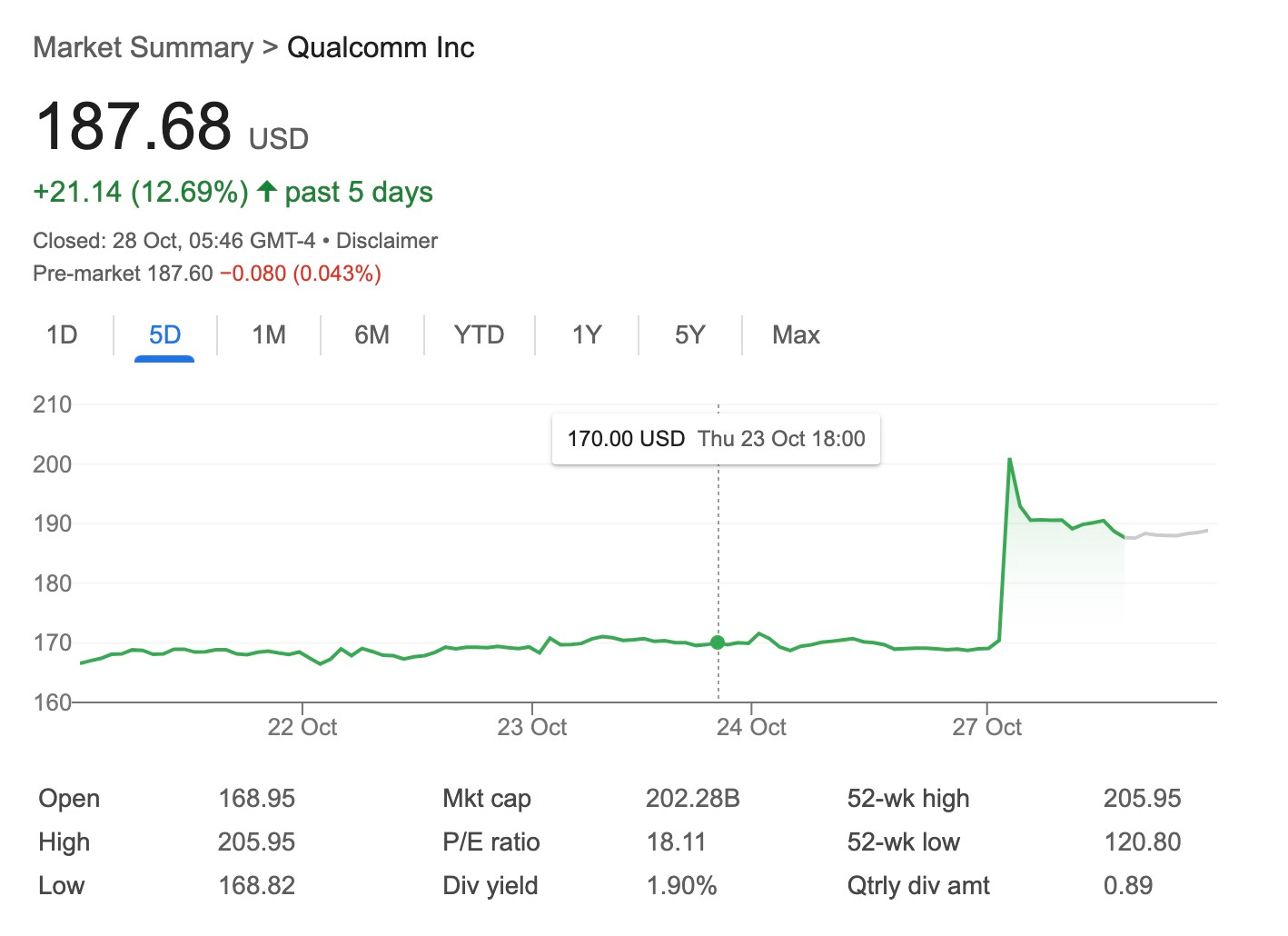

Despite all this, the AI200 and AI250 announcements led to a jump in Qualcomm’s share price on the day.

That’s an extra $20 billion or so in market value, which seems a lot but is, of course, less than 0.5% of Nvidia’s market cap!

What is it about the AI200 and AI250 that caused this excitement? Qualcomm are leading with ‘low total cost of ownership’ as the key selling point of the new designs.

Part of this is probably Qualcomm’s use of LPDDR (low-power DDR) memory rather than the more expensive and scarcer HBM (high-bandwidth memory). This should allow higher memory capacity - Qualcomm quotes up to 768 GB per card - as well as lower costs.
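To see why per-card capacity matters for inference, here is a rough back-of-the-envelope sketch that checks whether a model's weights alone would fit in a single card's memory. The only figure taken from Qualcomm is the quoted 768 GB maximum. The model sizes, the precisions and the helper names (`weight_footprint_gb`, `fits_on_card`) are hypothetical illustrations. The sketch also deliberately ignores the KV cache, activations and any bandwidth difference between LPDDR and HBM.

```python
# Hypothetical back-of-the-envelope check: do a model's weights fit on one card?
# Only the 768 GB capacity comes from Qualcomm's quoted per-card figure;
# the model sizes and precisions used below are made-up examples.

CARD_CAPACITY_GB = 768  # Qualcomm's quoted maximum memory per card

# Bytes needed to store one parameter at common inference precisions.
BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0, "int4": 0.5}


def weight_footprint_gb(params_billions: float, precision: str) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes).

    Billions of parameters times bytes per parameter gives GB directly.
    Ignores KV cache, activations and runtime overheads.
    """
    return params_billions * BYTES_PER_PARAM[precision]


def fits_on_card(params_billions: float, precision: str,
                 capacity_gb: float = CARD_CAPACITY_GB) -> bool:
    """True if the weights alone fit within the card's memory capacity."""
    return weight_footprint_gb(params_billions, precision) <= capacity_gb


if __name__ == "__main__":
    for params, prec in [(70, "fp16"), (400, "fp16"), (400, "fp8")]:
        size = weight_footprint_gb(params, prec)
        print(f"{params}B @ {prec}: {size:.1f} GB, fits: {fits_on_card(params, prec)}")
```

In this simplified model, a hypothetical 400-billion-parameter model at fp16 needs about 800 GB and would not fit on one card. The same model at fp8 needs about 400 GB and would fit. In practice the KV cache for long contexts and many concurrent users also competes for this capacity, which is part of why a large memory pool is attractive for inference.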

The idea of avoiding HBM isn’t new though, with Groq in particular apparently building a promising architecture and business on the
