The Shape of AI: Jaggedness, Bottlenecks and Salients

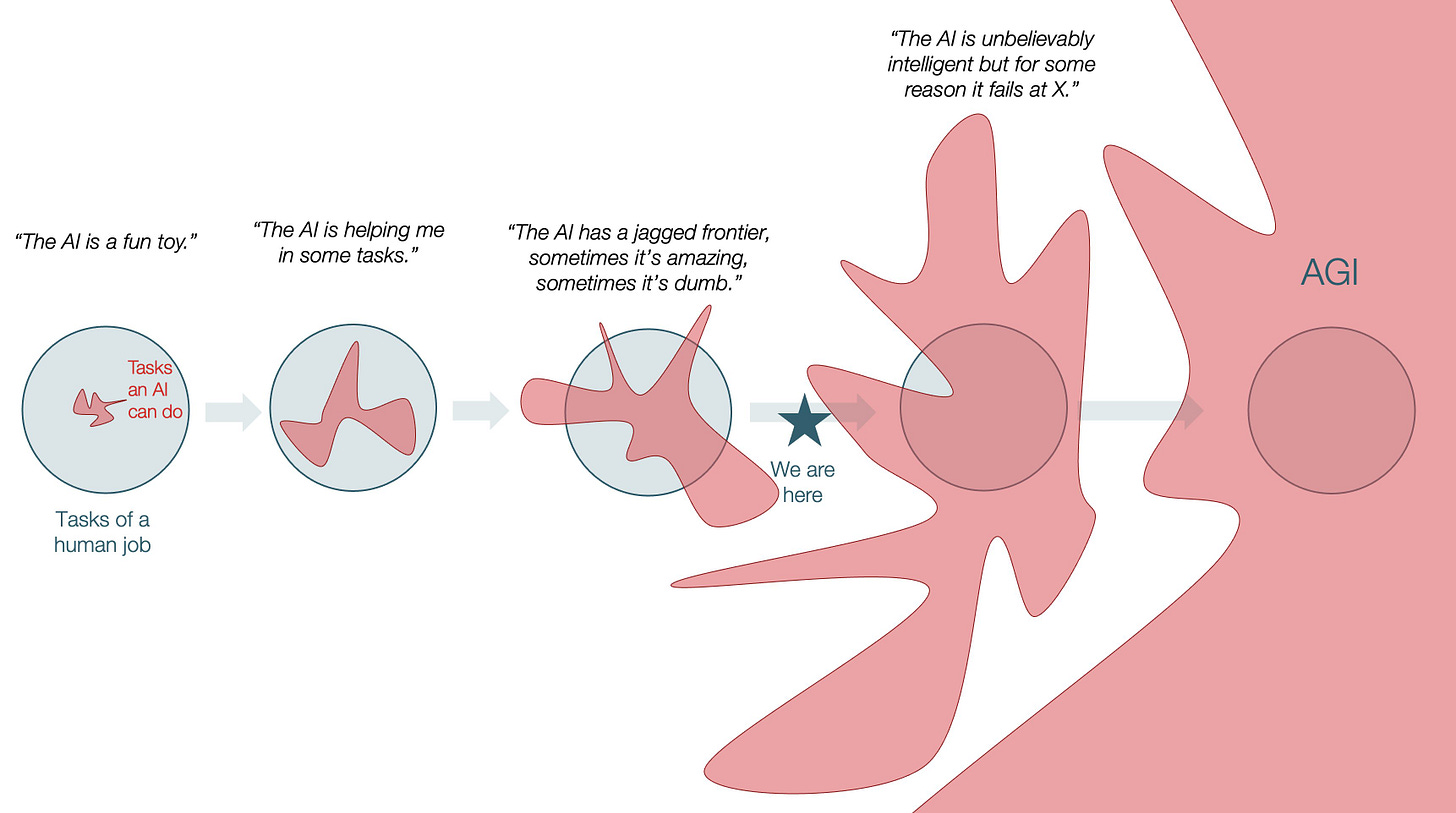

Back in the ancient AI days of 2023, my co-authors and I invented a term to describe the weird ability of AI to do some work incredibly well and other work incredibly badly in ways that didn’t map very well to our human intuition of the difficulty of the task. We called this the “Jagged Frontier” of AI ability, and it remains a key feature of AI and an endless source of confusion. How can an AI be superhuman at differential medical diagnosis or good at very hard math (yes, they are really good at math now, famously outside the frontier until recently) and yet still be bad at relatively simple visual puzzles or running a vending machine? The exact abilities of AI are often a mystery, so it is no wonder AI is harder to use than it seems.

I think jaggedness is going to remain a big part of AIs going forward, but there is less certainty over what it means. Tomas Pueyo posted this viral image on X that outlined his vision. In his view, the growing frontier will outpace jaggedness. Sure, the AI is bad at some things and may still be relatively bad even as it improves, but the collective human ability frontier is mostly fixed, and AI ability is growing rapidly. What does it matter if AI is relatively bad at running a vending machine, if the AI still becomes better than any human?

While the future is always uncertain, I think this conception misses a few critical aspects of the nature of work and technology. First, the frontier is very jagged indeed, and it might be that, because of this jaggedness, we get supersmart AIs that never quite fully overlap with human tasks. For example, a major source of jaggedness is that LLMs do not remember new tasks and learn from them in a permanent way. A lot of AI companies are pursuing solutions to this issue, but it may be that this problem is harder to solve than researchers expect. Without memory, AIs will struggle to do many tasks humans can do, even while being superhuman in other areas. Colin Fraser drew two examples of what this sort of AI-human overlap might look like. You can see how AI is indeed superhuman in some areas, but in others it is either far below human level or not

...This excerpt is provided for preview purposes. Full article content is available on the original publication.