Bringing an AI solution to the end user

The most exciting part? Experimenting with AI and developing the core technical solution.

The most daunting—and often overlooked—challenge? Deploying it to production.

An AI solution is useless until it’s deployed. Period. That’s why we knew from the start that we needed MLOps expertise to deliver value from end to end.

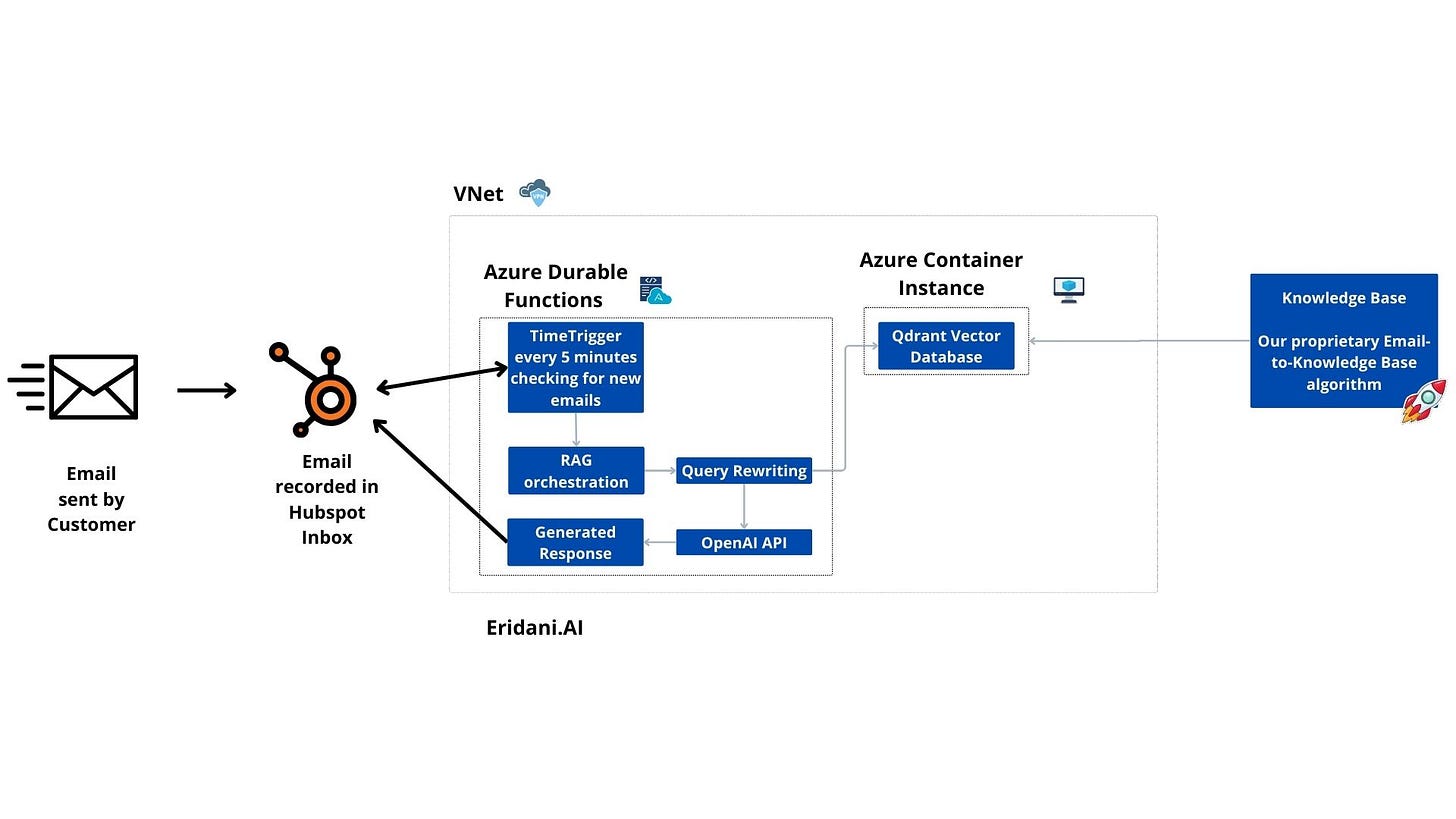

In theory, the RAG (Retrieval-Augmented Generation) space offers ready-to-use building blocks for deployment. In our experience, however, they lacked the flexibility we needed:

- No built-in integration with HubSpot, our client's tool of choice.
- The off-the-shelf RAG component was too basic and accounted for neither the preprocessing we required nor the messy state of the knowledge base.
- The architecture was rigid, which limited our ability to customize it for the client's needs.

We tested off-the-shelf solutions, but they simply didn't work: they returned incorrect answers to the questions sent to customer support. Answer correctness was well below the customer's acceptance threshold, and the project would have failed had we not moved beyond ready-made solutions.

After reading this article, you will have a clear idea of how to deploy a RAG application that is more complex than the basic tutorial examples.

We outline our architectural decisions, trade-offs, and the rationale behind them, demonstrating that while some choices come with limitations, they were made consciously with the current operational scope in mind.

Our goal was to create a robust yet cost-efficient system tailored to the customer's needs while avoiding over-engineering.

The system was designed around a clear set of assumptions to ensure efficiency, cost-effectiveness, and rapid implementation.

With an estimated volume of 500–600 emails per month, the focus was on scaling to meet this demand rather than over-engineering for hypothetical future growth. This strategic decision helped avoid unnecessary costs and complexity while ensuring a robust and stable system. The primary goal was to rapidly implement and validate the system’s usefulness, prioritizing deployment speed and real-world client feedback over building a long-term, future-proof solution.
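To put that volume in perspective, here is a quick back-of-envelope calculation. It assumes a 30-day month and emails arriving evenly around the clock; both are simplifications of ours, not figures from the project. Even the upper estimate works out to about 20 emails a day, or fewer than one per hour, a load that hardly calls for elaborate scaling machinery.

```python
# Back-of-envelope load estimate for the 500-600 emails/month quoted above.
# Assumptions (ours, not the article's): a 30-day month and emails spread
# evenly over 24 hours a day. Real traffic will be burstier.

def load_estimate(emails_per_month: int, days_per_month: int = 30) -> tuple[float, float]:
    """Return (emails per day, emails per hour) for a given monthly volume."""
    per_day = emails_per_month / days_per_month
    per_hour = per_day / 24
    return per_day, per_hour


for volume in (500, 600):
    per_day, per_hour = load_estimate(volume)
    print(f"{volume} emails/month -> {per_day:.1f}/day, {per_hour:.2f}/hour")
# 500 emails/month -> 16.7/day, 0.69/hour
# 600 emails/month -> 20.0/day, 0.83/hour
```

If emails actually cluster in business hours, the hourly peak would be a few times higher, but still small in absolute terms.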

A key principle was to avoid over-engineering: we made no premature optimizations for future scaling that might never be needed. Instead, we made conscious technology choices guided by two principles: safety and savings.

Azure deployments can turn out to be expensive, and for a small business a couple of hundred dollars per month can be a substantial share of the budget. We agreed on $500 per month in fixed costs as the upper limit. It meant that
