How Netflix Built a Distributed Write Ahead Log For Its Data Platform

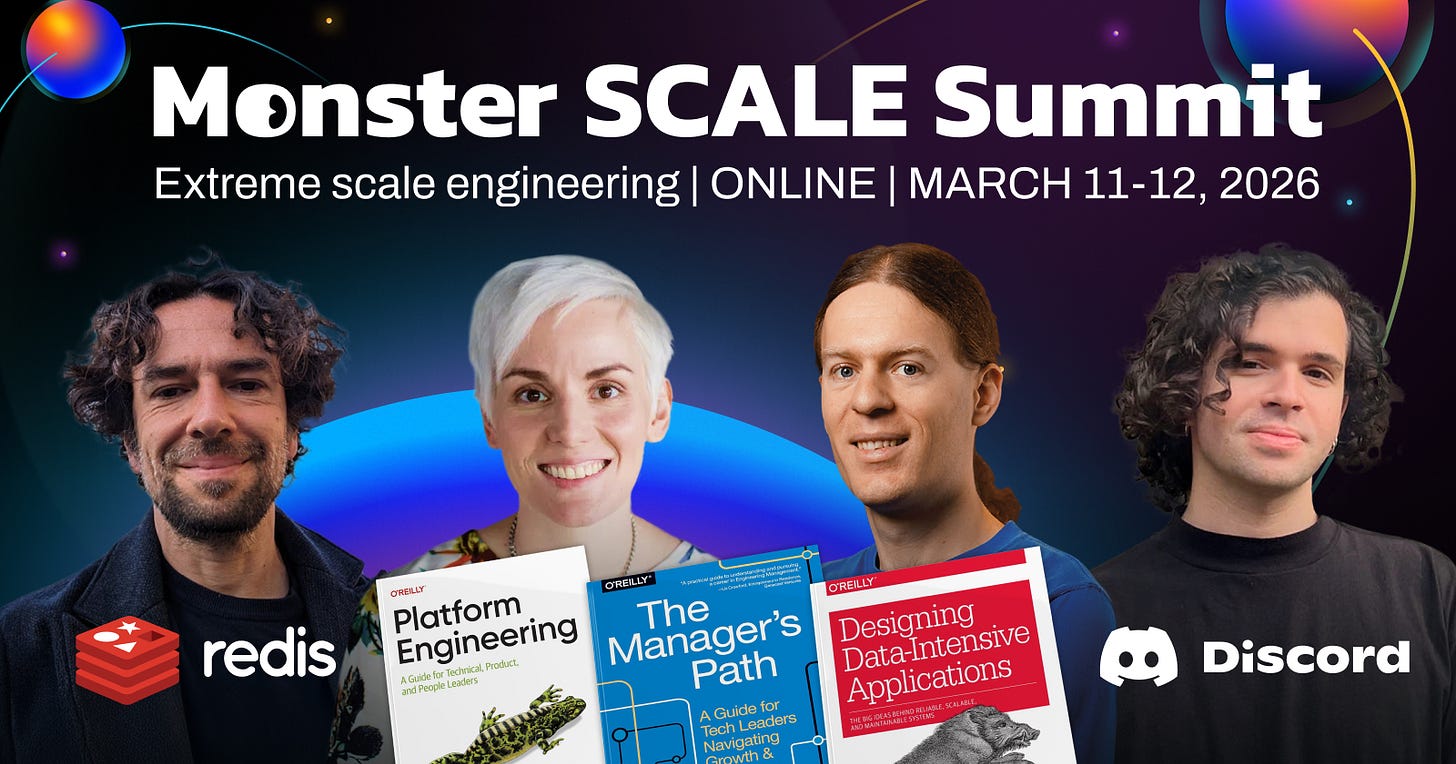

Disclaimer: The details in this post have been derived from the details shared online by the Netflix Engineering Team. All credit for the technical details goes to the Netflix Engineering Team. The links to the original articles and sources are present in the references section at the end of the post. We’ve attempted to analyze the details and provide our input about them. If you find any inaccuracies or omissions, please leave a comment, and we will do our best to fix them.

Netflix processes an enormous amount of data every second. Each time a user plays a show, rates a movie, or receives a recommendation, multiple databases and microservices work together behind the scenes. All of this runs on hundreds of independent systems that must stay consistent with one another. When something goes wrong in one system, the failure can quickly ripple across the platform.

Netflix’s engineering team faced several recurring issues that threatened the reliability of its data. These included accidental data corruption after schema changes, inconsistent updates between storage systems such as Apache Cassandra and Elasticsearch, and message delivery failures during transient outages. At times, bulk operations such as large delete jobs caused key-value database nodes to run out of memory. On top of that, some databases lacked built-in replication, so a regional failure could lead to permanent data loss.

Each engineering team tried to handle these issues differently. One team would build custom retry systems, another would design its own backup strategy, and yet another would use Kafka directly for message delivery. While these solutions worked individually, they created complexity and inconsistent guarantees across Netflix’s ecosystem. Over time, this patchwork approach increased maintenance costs and made debugging

...This excerpt is provided for preview purposes. Full article content is available on the original publication.