What should we do about kids and AI?

Welcome back to Techno Sapiens, and Happy (belated) Father’s Day!

I’m Jacqueline Nesi, a psychologist and professor at Brown University and mom of two young kids who currently answer only to “Marshall” and “Rubble.”1

If you like Techno Sapiens, please consider sharing it with a friend today.

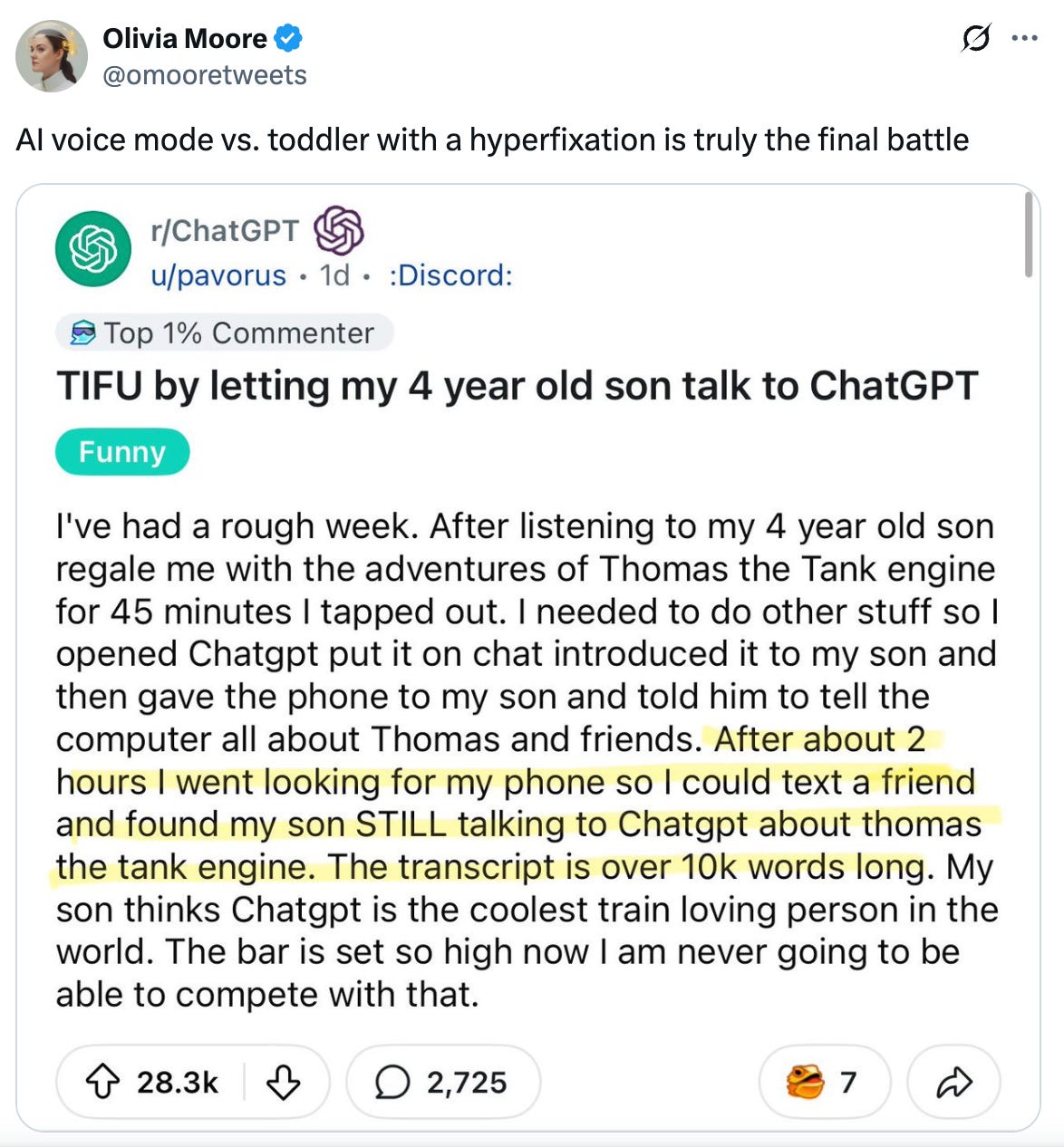

My husband recently sent me a post he came across on X:

My first reaction was to laugh. We imagined our preschool staging a similar filibuster, maybe with a focus on obscure sea creatures, or highly specific features of boats.

My second reaction was concern. We agreed that letting a child loose unsupervised with an AI chatbot is, generally, risky. There are no protections in place for kids, and content may not always be age-appropriate. Even with adult supervision, my 3-year-old once asked ChatGPT whether there were any corgis on the Titanic—a reasonable and urgent question—and was told that there were, but “sadly, they all perished.”2 Maybe not what he was ready to hear.

My third reaction, though, was a thought: It doesn’t have to be this way! Why, exactly, are there no protections in place for younger users? This is relevant for young kids like mine, but maybe even more so for adolescents, who are increasingly using AI tools independently.

The potential benefits of generative AI are incredible and transformative, but right now, the landscape is the Wild West. The risks are considerable, and there’s a collective burying-our-heads-in-the-sand situation going on. How can we protect younger users? How can we avoid the same mistakes we’ve made with other emerging tech?

Well, our friends at the American Psychological Association have some ideas of where to start. From the people3 that brought you the APA Health Advisory on Social Media Use in Adolescence, we’ve got a new report: Artificial Intelligence and Adolescent Well-Being.

There’s something in here for everyone: parents, tech companies, educators, policy-makers, and more.

So, let’s walk through the recommendations, along with my brief translation of each.

1. Ensure healthy boundaries with simulated human relationships

We do not want AI replacing (or displacing) adolescents’ real-life human relationships.

To protect against this, AI tools might offer regular reminders to teens that they’re interacting with a chatbot or encourage them to connect with trusted humans (especially if they’re struggling with something). We can also teach kids about the limits of what AI can do (see #9 below).

2.
