MiniMax M2 and Kimi-Linear: Why Full Attention Still Wins

Hi Everyone,

In this edition of The Weekly Kaitchup, let’s talk about linear/hybrid attention vs. full attention.

MiniMax just shipped an open-source, agent- and code-oriented model with about 10B parameters active out of roughly 230B.

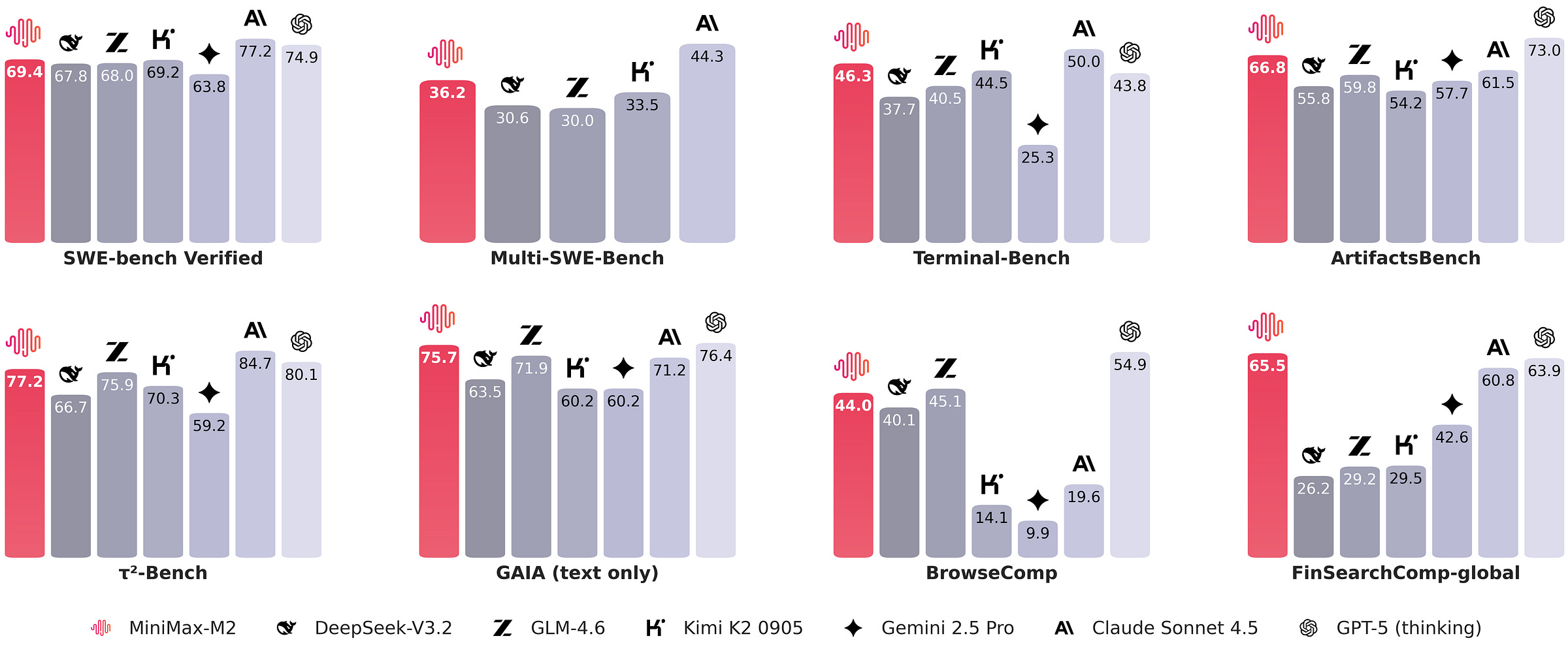

The model performs very well on benchmarks.

What I want to dig into is this: earlier MiniMax models aligned with the current trend toward hybrid attention (see Qwen3-Next, LFM, Granite 4.0, etc.), but with this release, they’ve gone back to plain full attention.

And they justify it pretty convincingly on X (original post in Chinese here).

Their core claim: full attention still wins because it’s the least fragile choice across tasks, model sizes, and inference stacks. “Efficient” attention isn’t dead; it’s just not mature enough to be the default for a system that has to do code, math, agents, multimodality, long-chain reasoning, and RL on top. The problem isn’t the theory. It’s the long list of conditions that all have to line up before an “efficient” design is actually efficient in production.

If your real objective is “same quality with fewer tokens,” scaling laws usually give you safer, more predictable gains than swapping out attention.

Hybrid attention looks good on public leaderboards, but MiniMax says it broke down on higher-order, multi-hop reasoning once they scaled the models. To even see that, they had to build new internal proxy metrics, and even those can drift as the model or data mixture changes.

Full attention, meanwhile, sits on years of kernel and inference engineering. Linear and sparse attention don’t. To reach the theoretical crossover point where they’re clearly better, you still need:

- low-precision state that doesn’t nuke stability,
- cache-friendly layouts that match real conversational traffic,
- speculative decoding paths that work with nonstandard attention.

Until all of those land together, the speed/price gains get eaten by IO, precision quirks, or serving constraints.
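To make the "theoretical crossover point" a bit more concrete, here is a back-of-the-envelope sketch in Python. It only counts memory: a full-attention KV cache that grows with every token versus a fixed-size recurrent state, which I assume here to be one `head_dim x head_dim` matrix per head, as in simple linear attention formulations. The function names and the configuration are hypothetical and are not taken from MiniMax M2, Kimi-Linear, or any other released model.

```python
def kv_cache_bytes(seq_len, n_layers, n_kv_heads, head_dim, bytes_per_elem=2):
    """Full attention: keys and values are stored for every past token."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_elem


def linear_state_bytes(n_layers, n_heads, head_dim, bytes_per_elem=2):
    """Linear attention (assumed form): one fixed head_dim x head_dim state
    per head and per layer, independent of the sequence length."""
    return n_layers * n_heads * head_dim * head_dim * bytes_per_elem


def crossover_tokens(n_layers, n_heads, n_kv_heads, head_dim,
                     kv_bytes=2, state_bytes=2):
    """Smallest context length at which the KV cache is at least as large
    as the fixed linear-attention state."""
    state = linear_state_bytes(n_layers, n_heads, head_dim, state_bytes)
    per_token = kv_cache_bytes(1, n_layers, n_kv_heads, head_dim, kv_bytes)
    return -(-state // per_token)  # ceiling division


# Hypothetical configuration, for illustration only.
cfg = dict(n_layers=48, n_heads=32, n_kv_heads=8, head_dim=128)

# KV cache for one 128K-token sequence, 16-bit keys and values.
full_at_128k = kv_cache_bytes(131072, cfg["n_layers"], cfg["n_kv_heads"], cfg["head_dim"])

# Fixed state for the same model shape, 16-bit state.
fixed_state = linear_state_bytes(cfg["n_layers"], cfg["n_heads"], cfg["head_dim"])

print(f"KV cache at 128K tokens: {full_at_128k / 1024**3:.0f} GiB")
print(f"Linear-attention state:  {fixed_state / 1024**2:.0f} MiB")
print(f"Crossover (16-bit state): {crossover_tokens(**cfg)} tokens")
print(f"Crossover (32-bit state): {crossover_tokens(**cfg, state_bytes=4)} tokens")
```

On paper, the fixed state wins after a few hundred tokens with these made-up numbers, and keeping the state in 32-bit for stability only doubles that threshold. That is the theory. The sketch says nothing about kernels, IO, cache reuse across conversations, or speculative decoding, which is where MiniMax says the gains actually get lost.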

But their most important point is about evaluation:

When you change a primitive like attention, you should assume public benchmarks will understate the damage. You need longer, richer, sometimes slower evals to surface regressions in reasoning, agentic behavior, and RL stability. Those evals are very expensive, but without them you can’t honestly claim the model is “efficient,” because whatever you lost will be repaid later as extra engineering, extra data, or extra compute. I 100% agree with that!

Qwen3-Next is a nice illustration. It’s an 80B model that does
