We Looked at 78 Election Deepfakes. Political Misinformation is not an AI Problem.

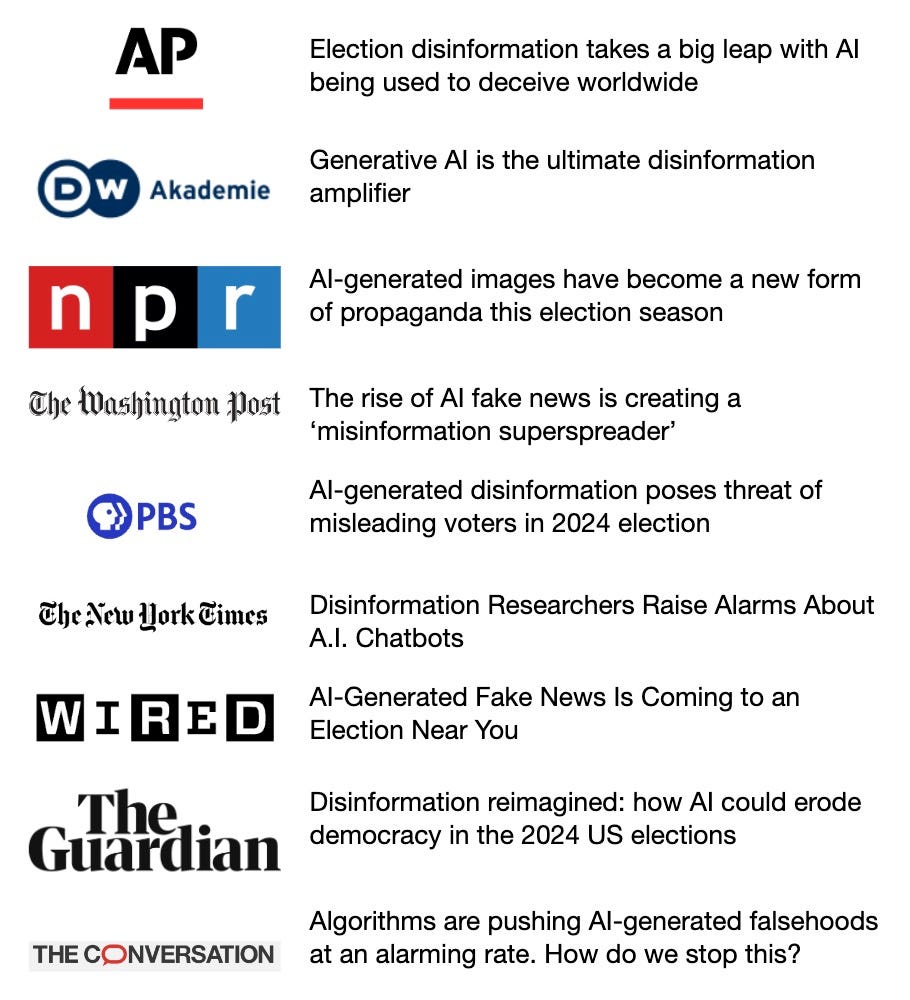

AI-generated misinformation was one of the top concerns during the 2024 U.S. presidential election. In January 2024, the World Economic Forum claimed that “misinformation and disinformation is the most severe short-term risk the world faces” and that “AI is amplifying manipulated and distorted information that could destabilize societies.” News headlines about elections in 2024 tell a similar story.

In contrast, in our past writing, we predicted that AI would not lead to a misinformation apocalypse. When Meta released its open-weight large language model (called LLaMA), we argued that it would not lead to a tidal wave of misinformation. And in a follow-up essay, we pointed out that the distribution of misinformation is the key bottleneck for influence operations, and while generative AI reduces the cost of creating misinformation, it does not reduce the cost of distributing it. A few other researchers have made similar arguments.

Which of these two perspectives better fits the facts?

Fortunately, we have the evidence of AI use in elections that took place around the globe in 2024 to help answer this question. Many news outlets and research projects have compiled known instances of AI-generated text and media and their impact. Instead of speculating about AI’s potential, we can look at its real-world impact to date.

We analyzed every instance of AI use in elections collected by the WIRED AI Elections Project, which tracked known uses of AI for creating political content during elections taking place in 2024 worldwide. In each case, we identified what AI was used for and estimated the cost of creating similar content without AI.

We find that (1) half of AI use isn't deceptive, (2) deceptive content produced using AI is nevertheless cheap to replicate without AI, and (3) focusing on the demand for misinformation rather than the supply is a much more effective way to diagnose problems and identify interventions.

To be clear, AI-generated synthetic content poses many real dangers: the creation of non-consensual images of people, the creation of child sexual abuse material, and the enabling of the liar’s dividend, which allows those in power to dismiss real but embarrassing or controversial media content about them as AI-generated. These are all important challenges. This essay is focused on a different problem: political misinformation.

Improving the information environment is a difficult and ongoing challenge. It’s understandable why people might think AI is making the problem worse: AI
