Choosing a GGUF Model: K-Quants, I-Quants, and Legacy Formats

For local LLM inference, the GGUF format, introduced by llama.cpp and popularized by frontends like Ollama, is by far the most common choice.

Each major LLM release is quickly followed by a wave of community GGUF conversions on the Hugging Face Hub. Prominent curators include Unsloth, Bartowski, and TheBloke (whose repos remain widely used), among many others. Repos often provide dozens of variants per model, each tuned for a different memory/quality trade-off.

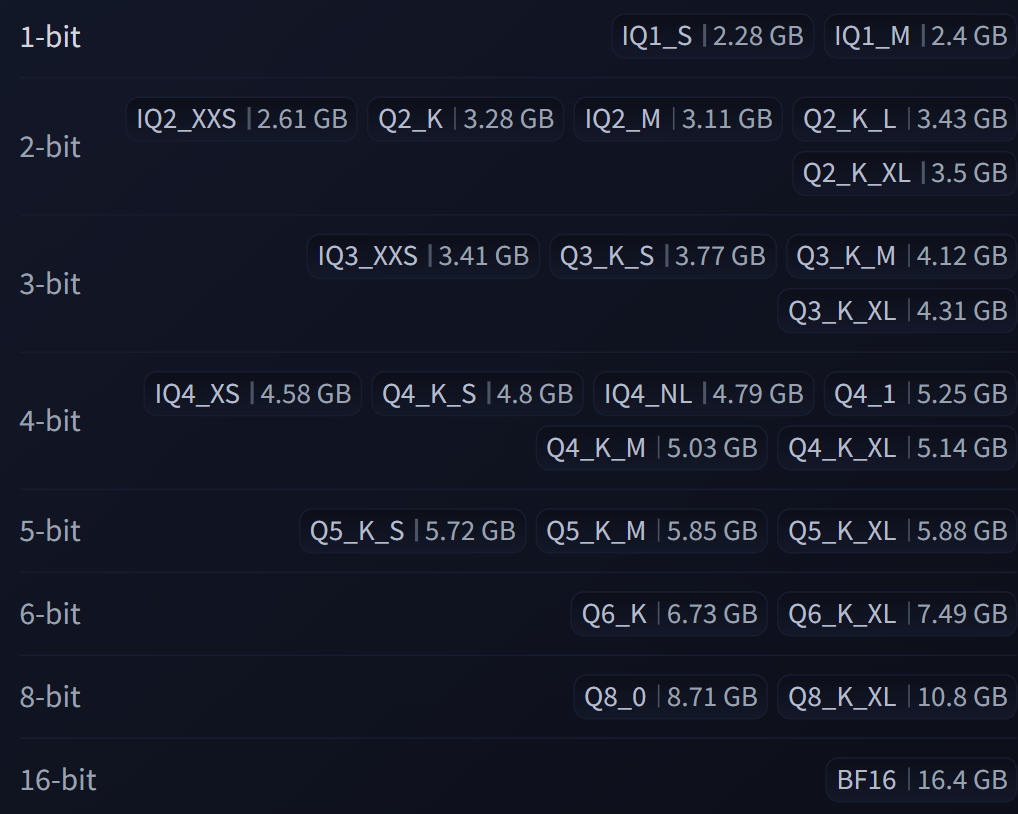

For instance, Unsloth released 25 GGUF versions of Qwen3 8B and 26 of DeepSeek-V3.1-Terminus.

That’s a lot of choice, but beyond filename and size, there’s rarely a clear guide to accuracy, speed, or trade-offs for each format. New variants land regularly, so I wrote this guide to demystify the main GGUF-serializable formats across architectures: how they work, why their accuracy/size/throughput differ, and when to pick each one. (This guide doesn’t cover converting your own models; I’ve written about that separately.)

“GGUF Quantization”

I introduced GGUF in this article:

TL;DR

Most GGUF weight formats are blockwise.

A matrix is split into fixed-size blocks. The weights in each block are stored as compact integer codes, and a small set of per-block parameters (such as a scale) reconstructs approximate floating-point weights at inference.

The design space is defined by three choices:

The number of bits used for the weight codes

The block size

The dequantization rule (linear scale and zero-point, multi-scale hierarchies, or non-linear/LUT-assisted schemes)

The more expressive the dequantization rule, the lower the error you can achieve for the same number of bits, at some decode cost.

In the next sections, “bits/weight” refers to the effective average once overheads like block scales are included. Values are approximate and vary a little by implementation and tensor shape, but they are useful for thinking about trade-offs.
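To make "effective bits/weight" concrete, here is a minimal sketch of the arithmetic. The function name is my own, and the example numbers assume 32-weight blocks with 16-bit scales and offsets, which, as far as I know, matches the legacy block layouts in llama.cpp; treat them as illustrative rather than authoritative.

```python
def bits_per_weight(code_bits, block_size, overhead_bits):
    """Effective bits per weight: code bits plus per-block overhead, averaged over the block."""
    return (code_bits * block_size + overhead_bits) / block_size

# Assumed layout: 32 weights per block, 16 bits per scale or offset.
print(bits_per_weight(4, 32, 16))  # 4-bit codes + one scale          -> 4.5
print(bits_per_weight(4, 32, 32))  # 4-bit codes + scale and offset   -> 5.0
print(bits_per_weight(8, 32, 16))  # 8-bit codes + one scale          -> 8.5
```

The overhead is amortized over the block, so larger blocks lower the effective bits/weight while forcing more weights to share the same parameters.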

Legacy Formats: Q_0 and Q_1

The legacy family of GGUF formats, Q4_0, Q4_1, Q5_0, Q5_1, Q8_0, implements classic per-block linear quantization. A block stores n-bit weight codes and either one scale (the “_0” variants, symmetric) or one scale plus one offset/zero-point (the “_1” variants, asymmetric). Dequantization is a single affine transform per block.
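The two variants can be sketched in a few lines of plain Python. This is a simplified textbook version, not llama.cpp's actual kernels: the function names are hypothetical, scales stay in full precision, and the exact code ranges and rounding used by the real formats may differ.

```python
def quantize_symmetric(block, bits):
    """'_0' style: one scale per block, signed codes centered on zero."""
    qmax = 2 ** (bits - 1) - 1
    amax = max(abs(w) for w in block)
    scale = amax / qmax if amax > 0 else 1.0
    codes = [max(-qmax, min(qmax, round(w / scale))) for w in block]
    return codes, scale

def dequantize_symmetric(codes, scale):
    return [scale * q for q in codes]

def quantize_asymmetric(block, bits):
    """'_1' style: one scale plus one offset per block, unsigned codes."""
    qmax = 2 ** bits - 1
    lo, hi = min(block), max(block)
    scale = (hi - lo) / qmax if hi > lo else 1.0
    codes = [max(0, min(qmax, round((w - lo) / scale))) for w in block]
    return codes, scale, lo

def dequantize_asymmetric(codes, scale, offset):
    # The single affine transform per block: w ~ scale * q + offset
    return [scale * q + offset for q in codes]

# Round trip on a small illustrative block (real blocks hold more weights).
block = [0.12, -0.40, 0.33, 0.90, -0.05, 0.27, 0.61, -0.18]
codes0, scale0 = quantize_symmetric(block, 4)
codes1, scale1, offset1 = quantize_asymmetric(block, 4)
print(dequantize_symmetric(codes0, scale0))
print(dequantize_asymmetric(codes1, scale1, offset1))
```

In both cases the reconstruction error per weight is bounded by half a quantization step, so the scale, which is set by the block's largest values, determines the precision for every weight in the block.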

These formats are simple to decode and therefore fast. Their weakness is representational: one affine map per block cannot model skewed or heavy-tailed weight distributions as well as newer schemes.
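A quick way to see this weakness is to add a single outlier to an otherwise well-behaved block. The sketch below uses a simplified 4-bit affine quantizer of my own (not llama.cpp's code) and made-up weights; it measures the reconstruction error on the 31 "typical" weights with and without the outlier.

```python
def affine_roundtrip(block, bits=4):
    """Quantize a block with one scale and one offset, then dequantize it."""
    qmax = 2 ** bits - 1
    lo, hi = min(block), max(block)
    scale = (hi - lo) / qmax if hi > lo else 1.0
    codes = [max(0, min(qmax, round((w - lo) / scale))) for w in block]
    return [scale * q + lo for q in codes]

# 31 hypothetical small weights, evenly spread in [-0.15, 0.15]
small = [0.01 * i for i in range(-15, 16)]
clean_block = small + [0.0]      # 32nd weight is unremarkable
outlier_block = small + [2.0]    # 32nd weight is a large outlier

def max_error_on_small(block):
    """Worst reconstruction error over the 31 small weights only."""
    recon = affine_roundtrip(block)
    return max(abs(w - r) for w, r in zip(block[:31], recon[:31]))

err_clean = max_error_on_small(clean_block)
err_outlier = max_error_on_small(outlier_block)
print(f"max error without outlier: {err_clean:.4f}")
print(f"max error with outlier:    {err_outlier:.4f}")
```

The outlier stretches the quantization grid across the whole block, so the small weights collapse onto only a few codes and their error grows several-fold. A single affine map cannot adapt to that.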

At 8-bit, the difference is negligible, and Q8_0 is effectively near-lossless for most LLMs. That’s why we can still see a lot
