Right-Sized AI Infrastructure. Marvell XPUs

Ready to study Marvell’s datacenter business?

Marvell tends to zoom in and talk about the components that power AI datacenters, namely “XPUs and XPU attach”. And we’ll get to those.

But first, let’s zoom out.

Marvell helps hyperscalers develop custom AI datacenters.

While “custom datacenters” might sound niche, pretty much all AI datacenters are custom. And of course, the AI datacenter TAM is insane.

But I thought the vast majority of the market uses Nvidia GPUs with NVLink and InfiniBand or Spectrum-X… so what do you mean by custom? Seems like it’s mostly all Nvidia…

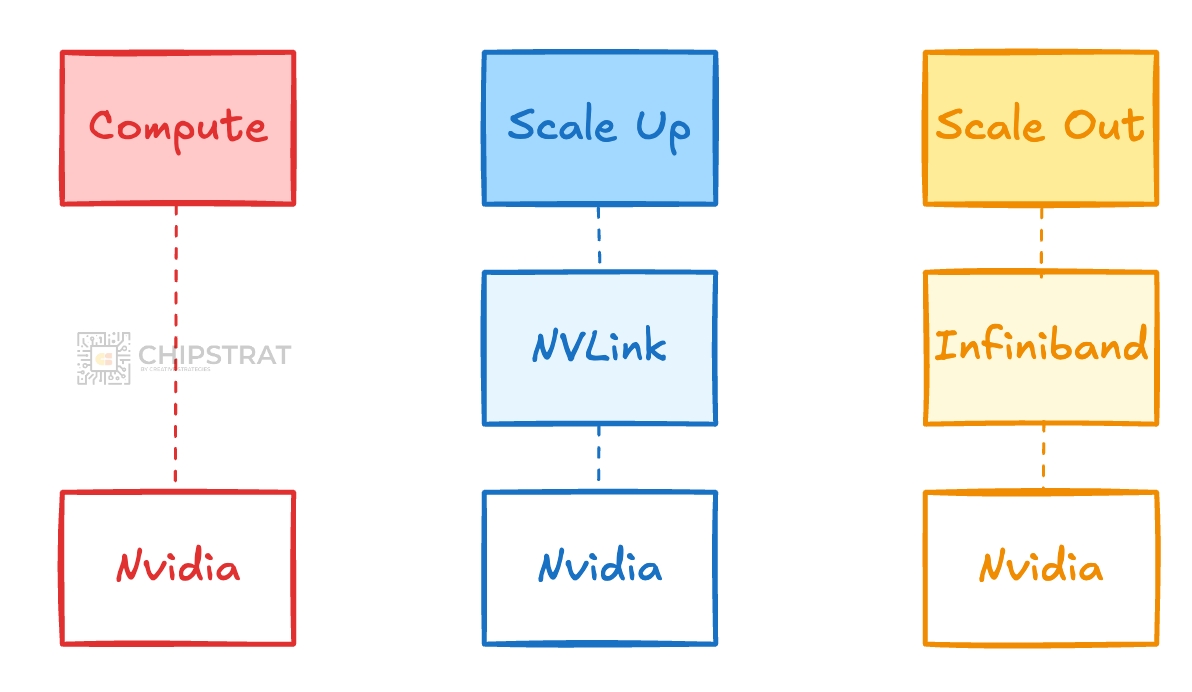

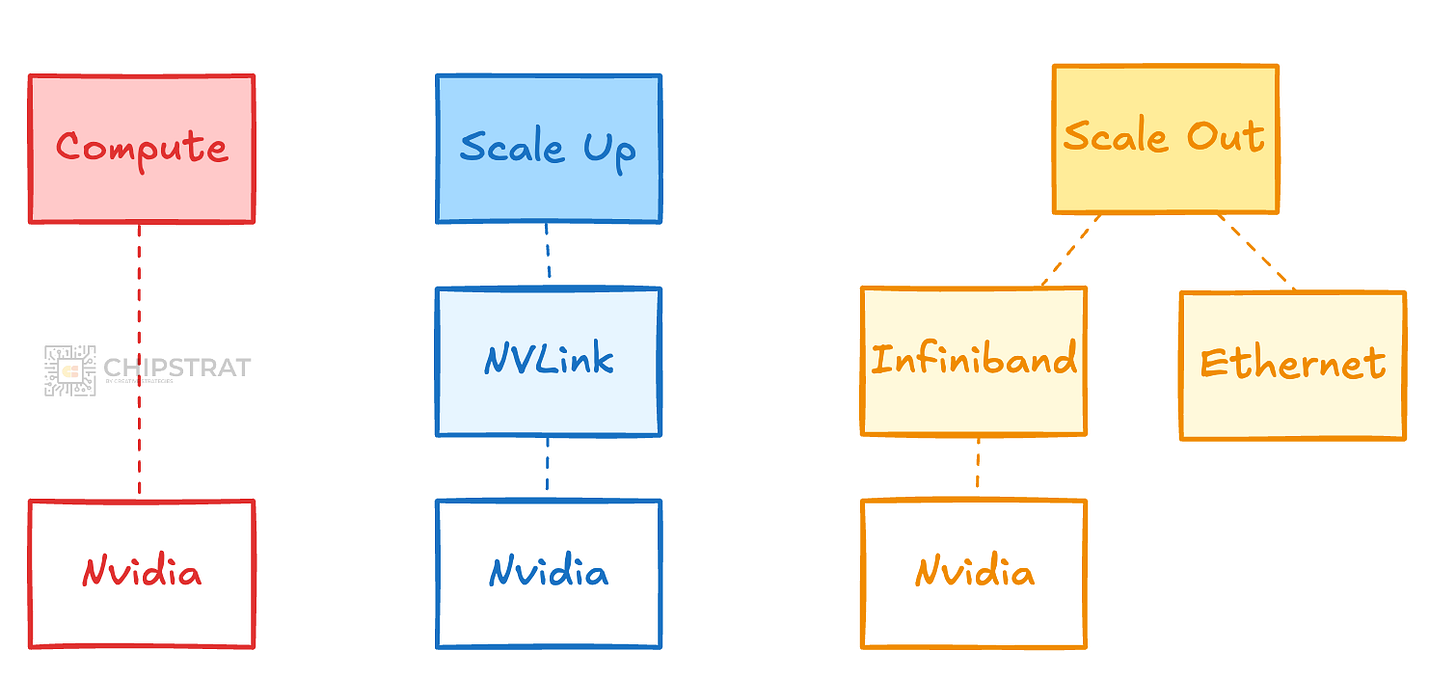

Recall that AI clusters are made up of many components beyond just the compute (GPU/XPU). They also include networking (scale-up and scale-out), storage, and software. And yes, while many AI clusters share similar components, the resulting system configuration is often distinct.

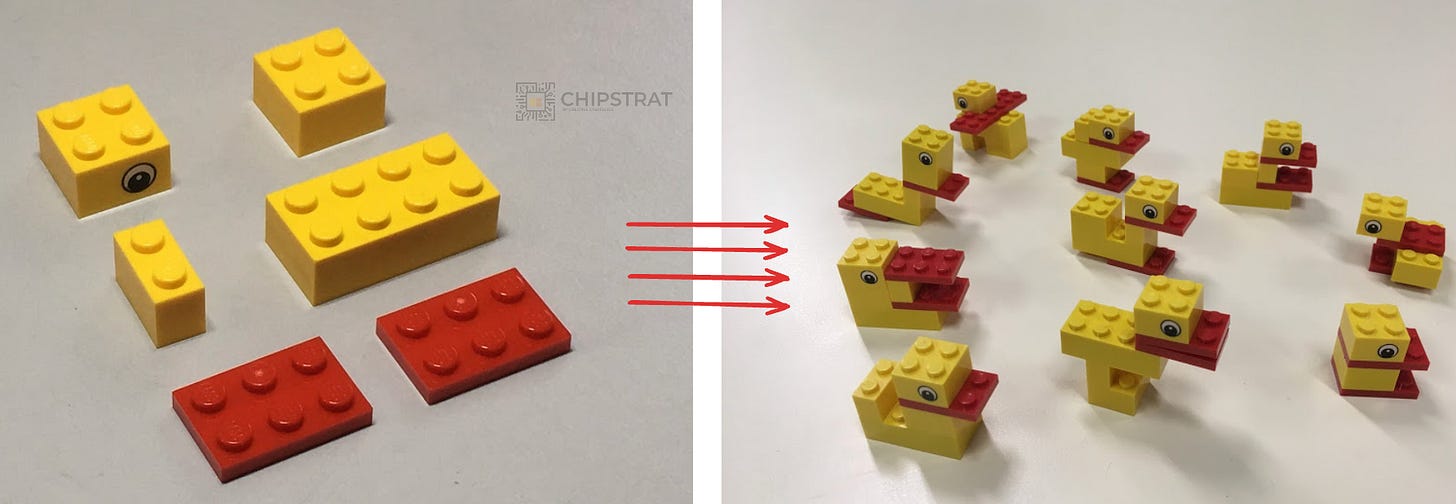

And just like the image above, each hyperscaler needs to choose the right building blocks and tune the AI cluster to meet its specific needs. A lot of the tuning can be done in software, but some workloads place specific demands on the underlying hardware configurations.

And sometimes the existing Lego blocks don’t quite meet a hyperscaler’s specific needs. That makes the case for designing the Lego blocks you need, i.e. working with a company like Marvell or Broadcom to make custom silicon for your datacenter.

But first, let’s take a step back and look at the evolution of the merchant silicon offerings.

From the earliest days, new Lego blocks started to emerge that let AI datacenter designers manage trade-offs and tune for specific workloads.

AI Datacenter Diversity

Early in the AI datacenter game, the compute option was largely standardized (e.g. Nvidia Ada/Hopper/Blackwell), and the scale-up network was too (NVLink). So it kind of felt like all roads led to Nvidia.

But for some time now, the scale-out infrastructure has been split between InfiniBand and Ethernet, and increasingly so.

You can start to see the increase in Lego blocks…

Scale Out Networking Diversity

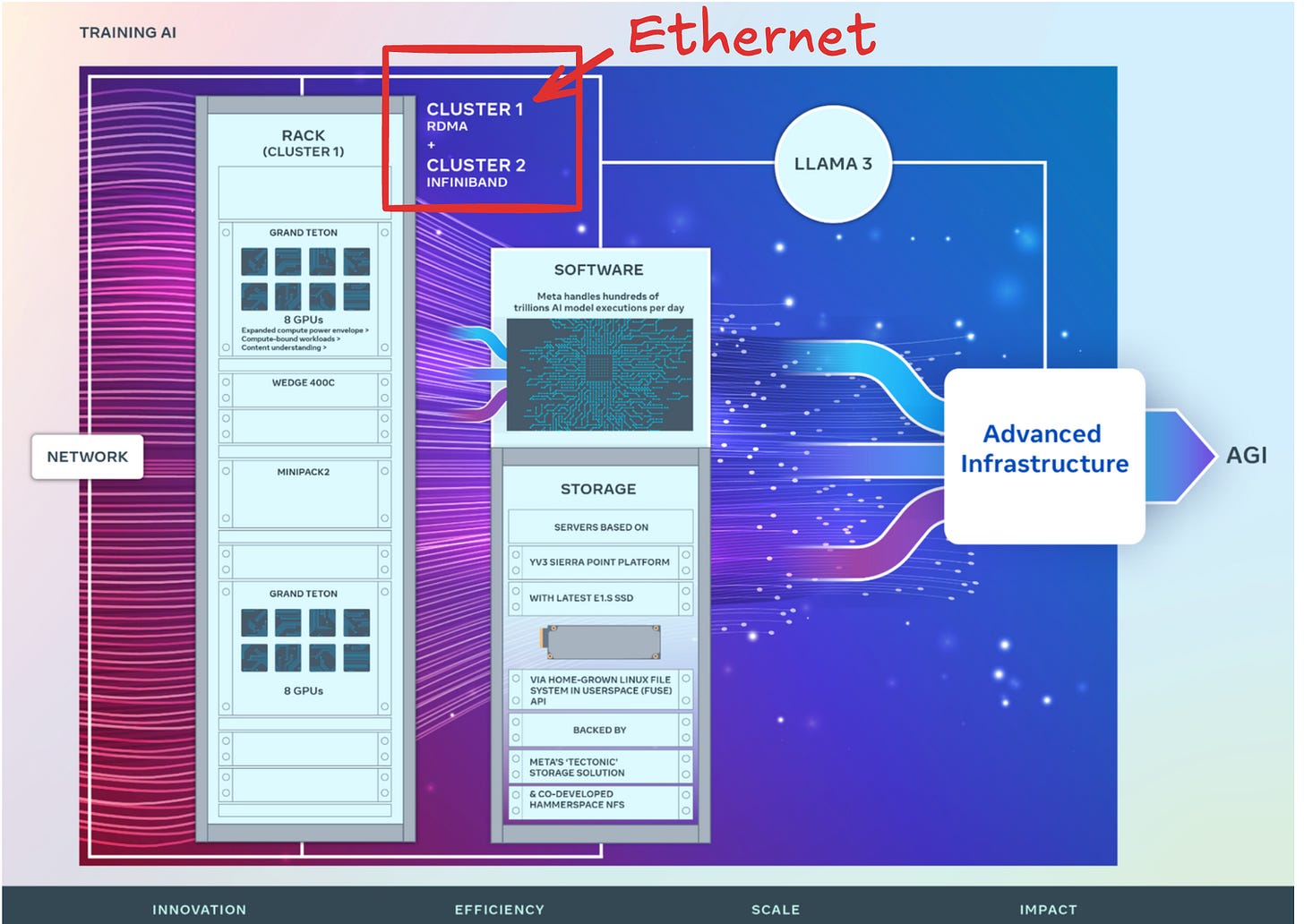

Here’s an example from Meta to illustrate.

Meta basically A/B tested two AI training clusters. Many components are the same, but the scale-out fabric differs: one cluster uses Ethernet and the other InfiniBand.
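To make the “same Lego blocks, one swapped” idea concrete, here’s a minimal sketch that models a cluster as a handful of building blocks and diffs two configurations. The `ClusterConfig` class, the `diff_configs` helper, and every field value are hypothetical placeholders for illustration, not Meta’s actual bill of materials; only the Ethernet vs. InfiniBand split comes from the example above.

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class ClusterConfig:
    """Hypothetical model of an AI cluster as a set of building blocks."""
    compute: str
    scale_up: str
    scale_out: str
    storage: str
    software: str


def diff_configs(a: ClusterConfig, b: ClusterConfig) -> dict:
    """Return {field: (a_value, b_value)} for every building block that differs."""
    return {
        f.name: (getattr(a, f.name), getattr(b, f.name))
        for f in fields(a)
        if getattr(a, f.name) != getattr(b, f.name)
    }


# Placeholder values: only the scale-out choice reflects the example in the text.
cluster_a = ClusterConfig(
    compute="Nvidia GPU",
    scale_up="NVLink",
    scale_out="Ethernet",
    storage="shared storage (placeholder)",
    software="shared software stack (placeholder)",
)

# Same blocks everywhere else; swap only the scale-out fabric.
cluster_b = replace(cluster_a, scale_out="InfiniBand")

print(diff_configs(cluster_a, cluster_b))
# {'scale_out': ('Ethernet', 'InfiniBand')}
```

Holding everything else constant is what makes it an A/B test: any difference between the two clusters can be pinned on the one block that changed.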

BTW, Meta nicely described how custom its infra is, tuned to meet its own specific needs:

...At Meta, we
