Notes on RNJ-1, K2-V2, Devstral 2, and GLM-4.6V

Hi everyone,

In this edition of The Weekly Kaitchup, I’ll review a subset of the models released over the past two weeks. The list is not exhaustive: I’ll only cover models I’ve actually tested, and I’ll exclude Ministral 3, which I briefly discussed last week and for which I’m preparing a dedicated article.

RNJ-1

Currently trending #1 on the HF hub in the “text generation” category, RNJ-1 was released by Essential AI, led by Ashish Vaswani (first author of Attention Is All You Need, the Transformer paper).

It’s an 8.3B decoder-only dense model with a very conventional design. It’s based on the Gemma architecture, but trained from scratch: 32 transformer blocks, rotary position embeddings, RMSNorm, and a gated MLP. Attention uses grouped-query attention. There are no architectural tricks. It also has a more common vocabulary size of 128k entries (against 262k for Gemma 3).
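To make two of those building blocks concrete, here is a minimal pure-Python sketch of RMSNorm and of how grouped-query attention shares key/value heads across query heads. The function names and the head counts in the usage note are illustrative assumptions, not RNJ-1's actual configuration, and implementations differ in how they parameterize the learned RMSNorm scale.

```python
import math


def rms_norm(x, weight, eps=1e-6):
    """Plain RMSNorm: divide by the root-mean-square, then apply a learned scale.

    `x` and `weight` are lists of floats of equal length (illustrative only;
    real implementations operate on tensors).
    """
    mean_sq = sum(v * v for v in x) / len(x)
    scale = 1.0 / math.sqrt(mean_sq + eps)
    return [v * scale * w for v, w in zip(x, weight)]


def kv_head_for_query_head(q_head, n_q_heads, n_kv_heads):
    """Grouped-query attention: consecutive query heads share one KV head."""
    if n_q_heads % n_kv_heads != 0:
        raise ValueError("n_q_heads must be a multiple of n_kv_heads")
    group_size = n_q_heads // n_kv_heads
    return q_head // group_size
```

With hypothetical values of 8 query heads and 2 KV heads, query heads 0 to 3 read from KV head 0 and query heads 4 to 7 from KV head 1, so the KV cache stores 2 heads per layer instead of 8.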

Pretraining was done with an 8k context window on a mixed corpus that leans toward code and STEM material. The optimizer is Muon (the new standard, it seems), with a learning-rate schedule of warmup, a flat phase, then cosine decay, all at fairly high global batch sizes. After this, the model goes through a mid-training phase that extends the context to 32k using a YaRN-style scheme and reinforces behavior on longer sequences. On top of that, they did a sizeable supervised fine-tuning stage, again biased toward code, math, and structured QA. Again, everything looks very normal.
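The warmup, flat phase, then cosine decay schedule can be sketched as a function of the training step. This is a generic illustration: the function name, the linear shape of the warmup, and every value passed to it are assumptions, not numbers from the RNJ-1 recipe.

```python
import math


def lr_at_step(step, peak_lr, warmup_steps, flat_steps, decay_steps, min_lr=0.0):
    """Learning rate for a warmup -> flat -> cosine-decay schedule.

    All arguments are hypothetical; the linear warmup is an assumption.
    """
    if step < warmup_steps:
        # Linear warmup, reaching peak_lr on the last warmup step.
        return peak_lr * (step + 1) / warmup_steps
    if step < warmup_steps + flat_steps:
        # Flat phase: hold the peak learning rate.
        return peak_lr
    # Cosine decay from peak_lr down to min_lr, then stay at min_lr.
    progress = min(1.0, (step - warmup_steps - flat_steps) / decay_steps)
    return min_lr + 0.5 * (peak_lr - min_lr) * (1.0 + math.cos(math.pi * progress))
```

For example, `lr_at_step(step, peak_lr=1.0, warmup_steps=10, flat_steps=20, decay_steps=30)` ramps up over the first 10 steps, holds at 1.0 until step 30, and decays to 0 by step 60.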

Where it’s different is that they stopped there. No fancy RL or DPO.

Because the SFT layer is not especially “heavy” in the alignment sense, RNJ-1-Instruct behaves like a usable chat model but still exposes the underlying base distribution enough that further fine-tuning is straightforward.

In practice, it handles typical code and math benchmarks at the level you’d expect for a well-trained 8B model with that data profile. The main reason to care, if you are an implementer, is that you get a dense 8B backbone with 32k context and a fully specified training recipe that you can easily fine-tune.

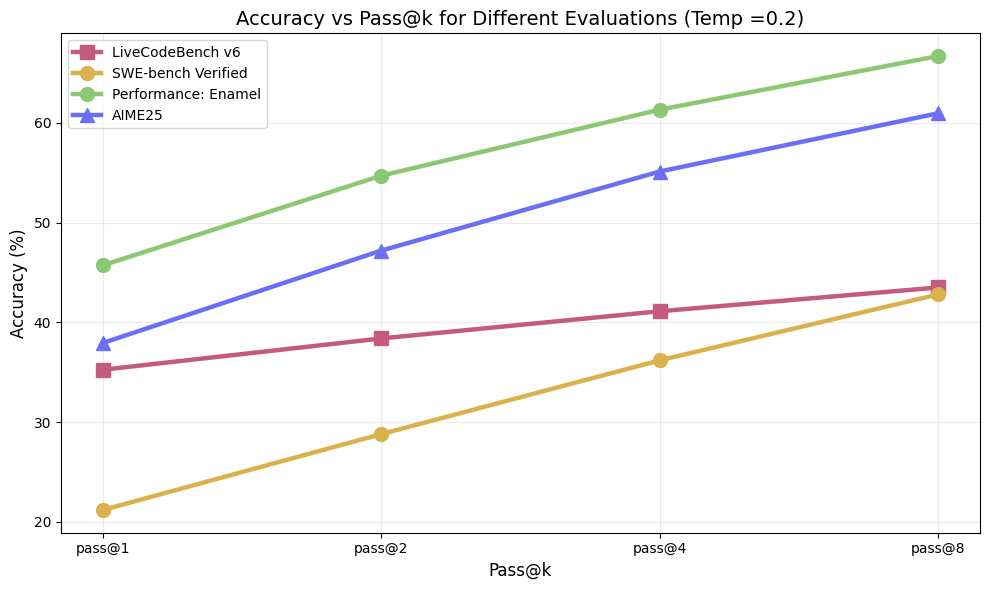

They also show this on the model card:

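This “accuracy vs pass@k” plot shows that the model is more likely to produce a correct answer when given more tries. Good pass@k indicates that the model is a good target for RLVR methods like GRPO, since these methods need at least some of the sampled answers to be correct to get a useful reward signal.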
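For reference, pass@k is commonly computed with the unbiased estimator 1 - C(n-c, k) / C(n, k), where n is the number of samples drawn per problem and c the number of correct ones. The sketch below assumes that estimator; the model card may compute the metric differently, and the function name is illustrative.

```python
from math import comb


def pass_at_k(n, c, k):
    """Unbiased pass@k estimate for a single problem.

    n: samples generated, c: samples that were correct, k: tries allowed.
    Returns the probability that at least one of k samples, drawn without
    replacement from the n generated, is correct.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Fewer than k incorrect samples: any draw of k contains a correct one.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)
```

The benchmark-level score is the mean of this value over all problems. A model with low pass@1 but high pass@k for larger k already produces correct answers somewhere in its samples.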

However, nothing is surprising here. Most recent models that were not trained with RL show very similar pass@k behavior. Here are my
