AI leaderboards are no longer useful. It's time to switch to Pareto curves.

By Sayash Kapoor, Benedikt Stroebl, Arvind Narayanan

Which is the most accurate AI system for generating code? Surprisingly, there isn’t currently a good way to answer questions like these.

Based on HumanEval, a widely used benchmark for code generation, the most accurate publicly available system is LDB (short for LLM debugger).1 But there’s a catch. The most accurate generative AI systems, including LDB, tend to be agents,2 which repeatedly invoke language models like GPT-4. That means they can be orders of magnitude more costly to run than the models themselves (which are already pretty costly). If we eke out a 2% accuracy improvement for 100x the cost, is that really better?

In this post, we argue that:

AI agent accuracy measurements that don’t control for cost aren’t useful.

Pareto curves can help visualize the accuracy-cost tradeoff.

Current state-of-the-art agent architectures are complex and costly but no more accurate than extremely simple baseline agents that cost 50x less in some cases.

Proxies for cost such as parameter count are misleading if the goal is to identify the best system for a given task. We should directly measure dollar costs instead.

Published agent evaluations are difficult to reproduce because of a lack of standardization and questionable, undocumented evaluation methods in some cases.

Maximizing accuracy can lead to unbounded cost

LLMs are stochastic. Simply calling a model many times and outputting the most common answer can increase accuracy.

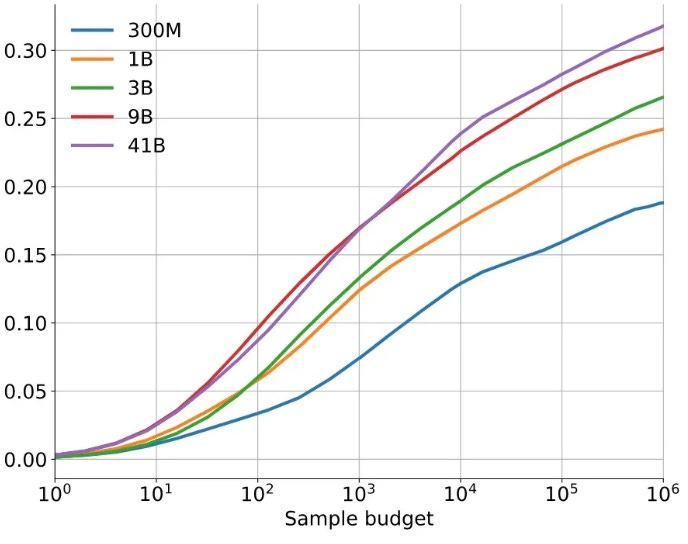

On some tasks, there is seemingly no limit to the amount of inference compute that can improve accuracy.3 Google Deepmind's AlphaCode, which improved accuracy on automated coding evaluations, showed that this trend holds even when calling LLMs millions of times.

A useful evaluation of agents must therefore ask: What did it cost? If we don’t do cost-controlled comparisons, it will encourage researchers to develop extremely costly agents just to claim they topped the leaderboard.

In fact, when we evaluate agents that have been proposed in the last year for solving coding tasks, we find that visualizing the tradeoff between cost and accuracy yields surprising insights.

Visualizing the accuracy-cost tradeoff on HumanEval, with new baselines

We

...This excerpt is provided for preview purposes. Full article content is available on the original publication.