Efficient LLMs at Scale: My NeurIPS Week in KV Caches, Spec Decoding, and FP4

A week after getting back from NeurIPS in San Diego, I finally have my full report ready. There was a lot to process and write up.

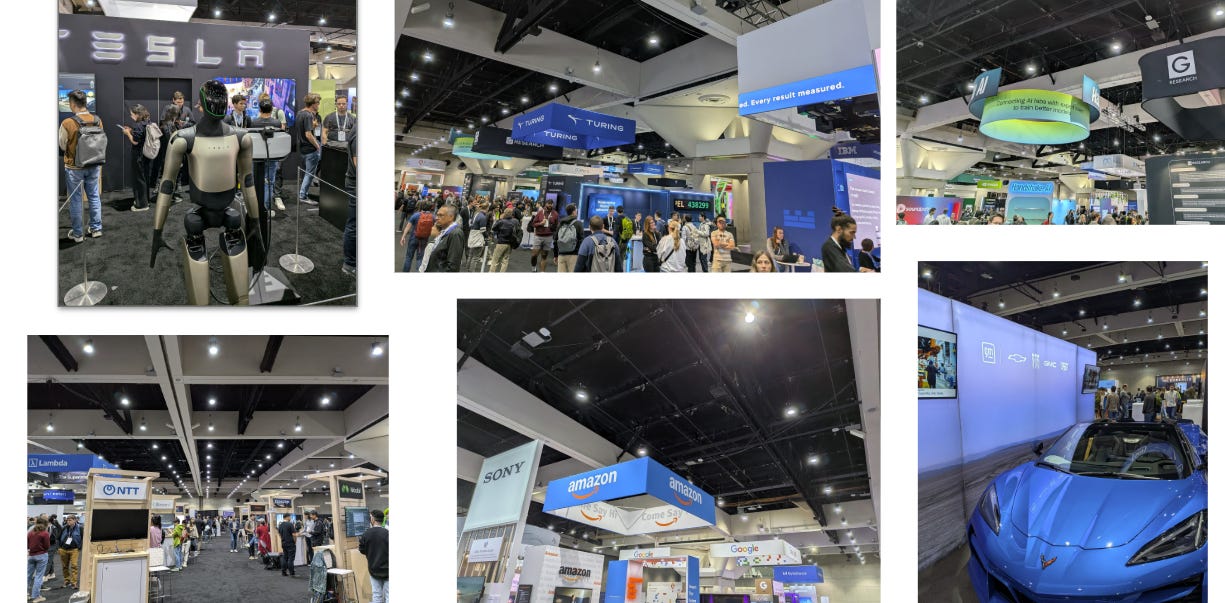

If you’re not familiar with it, NeurIPS has been the flagship conference for AI research for years, with tracks on deep learning, optimization, theory, and a growing number of applications. Ten years ago, it already felt massive with around 4,000 participants. This year, the organizers announced more than 29,000 registrations (including virtual attendees, and 500 people attending from the Mexico satellite venue, but not counting on-site late registrations). I’ve been to dozens of research conferences in my life, and I’ve never seen anything close to this scale.

Downtown San Diego, especially the “historic” Gaslamp district, was completely taken over. Every hotel lobby, bar, and restaurant seemed to be hosting some variation of the same conversation about LLMs and agents. French and US border agents at the airports asked me the same question: “Are you going to THIS conference?” I’m sure the local businesses did very well. It felt like every second table was a mini-NeurIPS in itself.

In this article, I want to share what I took away from the week. This is not a complete overview of NeurIPS (that would be impossible), but a view from my corner of it. My work is mostly about adapting LLMs: fine-tuning, quantization, evaluation, and efficient inference. So that’s where I naturally gravitated: the sessions, posters, and hallway conversations on scaling, compression, and inference tricks. I spent (almost) no time in the more theoretical or classical computer vision tracks. There was also a “Machine Learning” track that I didn’t visit.

I’ll structure this report around a few themes:

- Highlights around three topics:
  - KV Cache: The Enemy Number One
  - Speculative Decoding with Trees
  - FP4 at Training Time: Four Bits Are (Almost) Enough
- Good paper special mentions:
  - How to do AdamW with a batch size of 1
  - How leaderboards are gamed
- Comments on Yejin Choi’s keynote, which perfectly captured the year
- How to survive (and enjoy) a conference as big as NeurIPS

One important disclaimer: the official hot topic of this year was clearly reinforcement learning. But you won’t find much RL in this write-up. That’s partly practical: the RL area was packed, and having the kind of in-depth discussions I’d want there would have eaten most of my conference time. It’s also because I’ve already read and played with many of the RL
