LLM Research Papers: The 2024 List

It’s been a very eventful and exciting year in AI research. This is especially true if you are interested in LLMs.

I had big plans for this December edition and was planning to publish a new article with a discussion of all my research highlights from 2024. I still plan to do so, but due to an accident and serious injury, I am currently unable to work at a computer and finish the draft. But I hope to recover in the upcoming weeks and be back on my feet soon.

In the meantime, I want to share my running bookmark list of many fascinating (mostly LLM-related) papers I stumbled upon in 2024. It’s just a list, but maybe it will come in handy for those who are interested in finding some gems to read for the holidays.

And if you are interested in more code-heavy reading and tinkering, My Build A Large Language Model (From Scratch) book is out on Amazon as of last month.

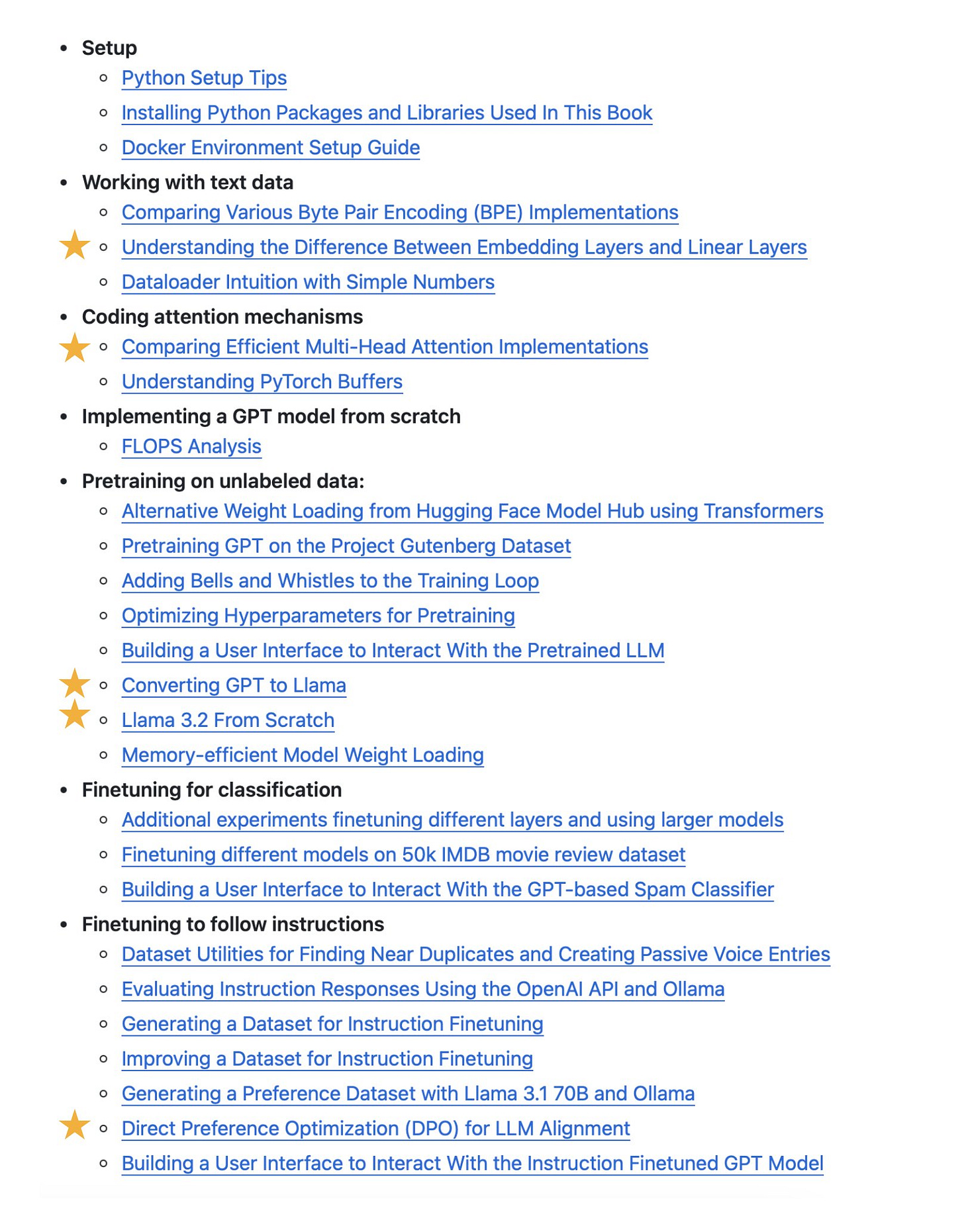

In addition, I added a lot of bonus materials to the GitHub repository.

Thanks for your understanding and support, and I hope to make a full recovery soon and be back with the Research Highlights 2024 article in a few weeks!

January 2024

1 Jan, Astraios: Parameter-Efficient Instruction Tuning Code Large Language Models, https://arxiv.org/abs/2401.00788

2 Jan, A Comprehensive Study of Knowledge Editing for Large Language Models, https://arxiv.org/abs/2401.01286

2 Jan, LLM Maybe LongLM: Self-Extend LLM Context Window Without Tuning, https://arxiv.org/abs/2401.01325

2 Jan, Self-Play Fine-Tuning Converts Weak Language Models to Strong Language Models, https://arxiv.org/abs/2401.01335

2 Jan, LLaMA Beyond English: An Empirical Study on Language Capability Transfer, https://arxiv.org/abs/2401.01055

3 Jan, A Mechanistic Understanding of Alignment Algorithms: A Case Study on DPO and Toxicity, https://arxiv.org/abs/2401.01967

4 Jan, LLaMA Pro: Progressive LLaMA with Block Expansion, https://arxiv.org/abs/2401.02415

4 Jan, LLM Augmented LLMs: Expanding Capabilities through Composition, https://arxiv.org/abs/2401.02412

4 Jan, Blending Is All You Need: Cheaper, Better Alternative to Trillion-Parameters LLM, https://arxiv.org/abs/2401.02994

5 Jan, DeepSeek LLM: Scaling Open-Source Language Models with Longtermism, https://arxiv.org/abs/2401.02954

5 Jan, Denoising Vision Transformers, https://arxiv.org/abs/2401.02957

7 Jan, Soaring from 4K to 400K: Extending LLM’s Context with Activation Beacon, https://arxiv.org/abs/2401.03462

8 Jan, Mixtral of Experts, https://arxiv.org/abs/2401.04088

8 Jan, MoE-Mamba: Efficient Selective State Space Models with Mixture of Experts, https://arxiv.org/abs/2401.04081

8 Jan, A Minimaximalist

This excerpt is provided for preview purposes. Full article content is available on the original publication.