LLM Research Papers: The 2025 List (January to June)

As some of you know, I keep a running list of research papers I (want to) read and reference.

About six months ago, I shared my 2024 list, which many readers found useful. So, I was thinking about doing this again. However, this time, I am incorporating one piece of feedback that kept coming up: "Can you organize the papers by topic instead of date?"

The categories I came up with are:

1. Reasoning Models

- 1a. Training Reasoning Models

- 1b. Inference-Time Reasoning Strategies

- 1c. Evaluating LLMs and/or Understanding Reasoning

2. Other Reinforcement Learning Methods for LLMs

3. Other Inference-Time Scaling Methods

4. Efficient Training & Architectures

5. Diffusion-Based Language Models

6. Multimodal & Vision-Language Models

7. Data & Pre-training Datasets

Also, as LLM research continues to be shared at a rapid pace, I have decided to break the list into twice-yearly updates. This way, the list stays digestible, timely, and hopefully useful for anyone looking for solid summer reading material.

Please note that this is just a curated list for now. In future articles, I plan to revisit and discuss some of the more interesting or impactful papers in larger topic-specific write-ups. Stay tuned!

Announcement:

It's summer! And that means internship season, tech interviews, and lots of learning.

To support those brushing up on intermediate to advanced machine learning and AI topics, I have made all 30 chapters of my Machine Learning Q and AI book freely available for the summer:

🔗 https://sebastianraschka.com/books/ml-q-and-ai/#table-of-contents

Whether you are just curious and want to learn something new or prepping for interviews, hopefully this comes in handy.

Happy reading, and best of luck if you are interviewing!

1. Reasoning Models

This year, my list is very heavy on reasoning models. So, I decided to subdivide it into 3 categories: training, inference-time scaling, and more general understanding/evaluation.

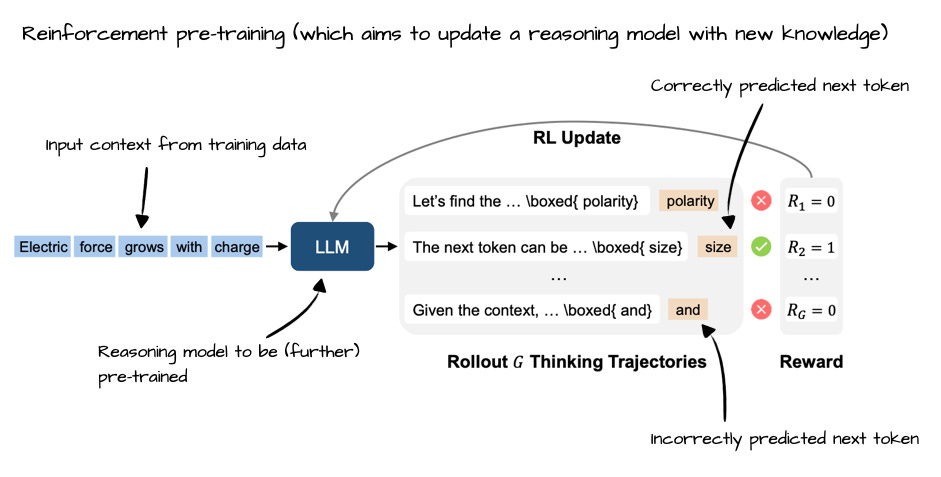

1a. Training Reasoning Models

This subsection focuses on training strategies specifically designed to improve reasoning abilities in LLMs. As you will see, much of the recent progress has centered around reinforcement learning (with verifiable rewards), which I covered in more detail in a previous article.

8 Jan, Towards System 2 Reasoning in LLMs: Learning How to Think With Meta Chain-of-Thought, https://arxiv.org/abs/2501.04682

13 Jan, The Lessons of Developing Process Reward Models in Mathematical Reasoning, https://arxiv.org/abs/2501.07301

16 Jan, Towards Large Reasoning Models: A Survey of Reinforced Reasoning with Large Language Models, https://arxiv.org/abs/2501.09686

20 Jan, Reasoning Language Models: A Blueprint,
