LLM Hallucinations Are Still Absolutely Nuts


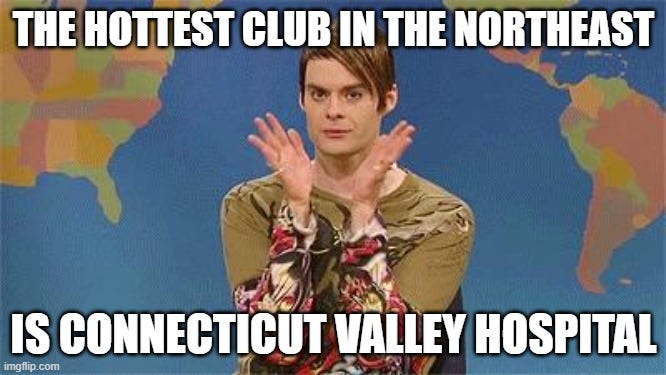

A couple months back, a grad student reached out to me to see if I could help with some research they were doing. (I’m keeping things vague for reasons that will become apparent.) They had heard that I had previously been a patient at Connecticut Valley Hospital. I told them that I would be happy to do my best with whatever questions they might have, but that I hadn’t been there in 20 years and my memories were dimmed by the weight of years, psychosis, and psychiatric medications. But, sure - I would tell them what I knew. I answered questions about my experiences there, mainly focusing on how I came to be admitted, my legal status on my first go-round, the wards I had principally been in, and my release. We exchanged emails for a while and that was that.

Then, just a few days before the Christmas holiday, they got back in touch with me. They had some more questions that they had not been able to answer through their own research. They asked me about several features of life “on campus” that I couldn’t speak to, and I grew confused. One was about the “Vance Building.” I told them I wasn’t familiar with it, but that wasn’t unusual; CVH is a sprawling facility with lots of different buildings that in many ways operate as their own little worlds, and again, it’s been a couple of decades since I was there. Then they asked me if I remembered “the club.” To which I replied, the… club?

A bit of confused back and forth went down, and eventually they sheepishly admitted that they had been doing some research through Google Gemini. They insisted (quite strenuously) that they would never have an LLM write any of their actual work for them - and, for the record, I believe them - but that they had tried to fill in gaps in information with AI. With my gentle pushback, they were now concerned that they had a few facts wrong. I asked them if they would copy and paste the Gemini output and send it to me and they did. And, hoo boy! Please note that Gemini is believed by many to be the most capable consumer LLM and that the grad student was using the “Thinking” mode for this. I was sent thousands of words of this stuff; I’m going to try and spare you by
