Mistral Large 3: Not a Reasoning Model

Hi everyone,

In this edition of The Weekly Kaitchup, I briefly discuss Mistral AI’s new (and somewhat unusual) releases.

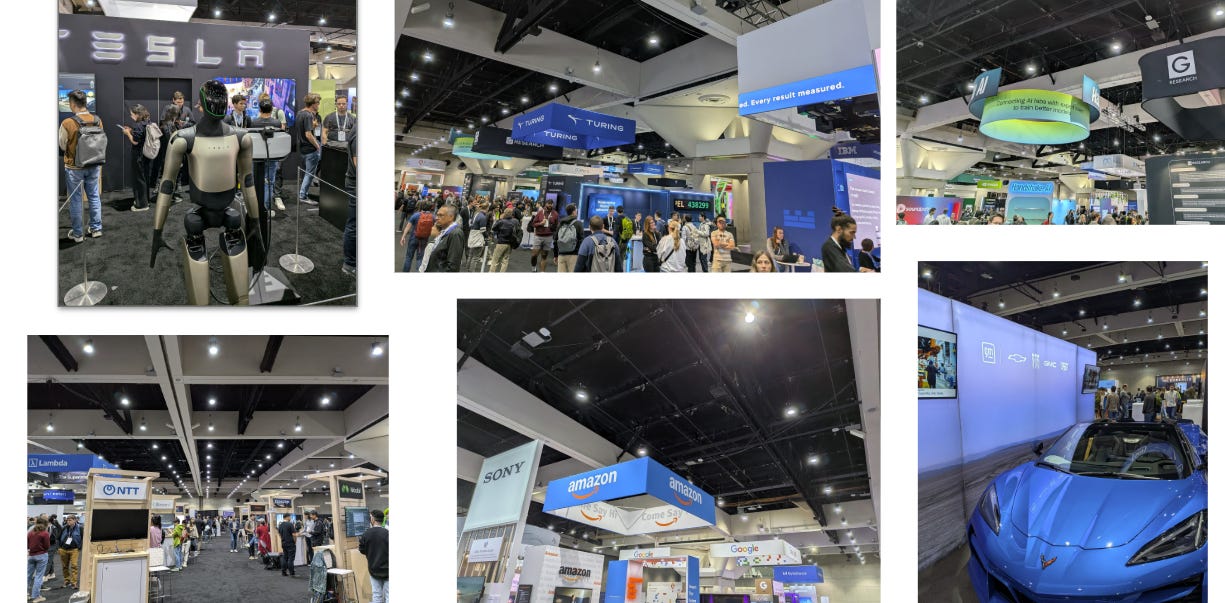

I’m currently at NeurIPS. There are about 29k attendees this year (including virtual), up 64% compared to last year. Ten years ago in Montreal, 4k participants already felt like a huge conference. At this pace, 100k people by 2030 doesn’t sound impossible.
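As a quick sanity check on that 100k guess, here is a minimal sketch of the compound-growth arithmetic. It uses only the two attendance figures quoted above; the five-year horizon assumes this year is 2025, which is my assumption and not something stated here, and the function names are purely illustrative.

```python
def annual_growth_rate(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that takes `start` to `end` over `years`."""
    return (end / start) ** (1 / years) - 1


def project(current: float, rate: float, years: int) -> float:
    """Attendance after `years` of compounding at a constant annual `rate`."""
    return current * (1 + rate) ** years


ATTENDEES_TEN_YEARS_AGO = 4_000   # "4k participants" in Montreal
ATTENDEES_NOW = 29_000            # "about 29k attendees this year"
YEARS_UNTIL_2030 = 5              # assumption: the current year is 2025

# Average yearly growth over the last decade.
ten_year_rate = annual_growth_rate(ATTENDEES_TEN_YEARS_AGO, ATTENDEES_NOW, 10)

# Where that average pace would land in 2030.
projected_2030 = project(ATTENDEES_NOW, ten_year_rate, YEARS_UNTIL_2030)

# Yearly growth needed from now on to reach 100k by 2030.
required_rate = annual_growth_rate(ATTENDEES_NOW, 100_000, YEARS_UNTIL_2030)

print(f"10-year average growth: {ten_year_rate:.1%} per year")
print(f"2030 projection at that pace: {projected_2030:,.0f}")
print(f"Growth needed to hit 100k by 2030: {required_rate:.1%} per year")
```

Under these assumptions, the ten-year average pace falls somewhat short of 100k by 2030, while the growth rate needed to get there is well below this year's 64% jump. So 100k is a stretch, but not an absurd one.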

This year, the organizers split the conference across three venues (San Diego, Mexico City, and Copenhagen), but unsurprisingly, most people want to be at the main site, in this case San Diego. Also: so many sponsors. It feels more and more like a trade expo!

Mistral Large 3: not a reasoning model

Mistral AI knows how to get attention: they released a large open model on the first day of NeurIPS. It worked. Everyone is talking about it, but many are missing a key point: Mistral Large 3 is not a reasoning model.

Despite this, I saw third-party evaluations placing it on the same charts as reasoning models, with the reasoning models marked only by a “reasoning” icon or bubble that is very easy to miss.

This is misleading. Mistral Large 3 is an instruct model, not a long-chain-of-thought “thinking” model, which is quite unusual for a model of this size. Artificial Analysis confirms this: Mistral Large 3 generates 10 times fewer tokens than Kimi K2 Thinking and GPT-OSS 120B (reasoning_effort="high").

Why did Mistral AI only release an instruct version?

I can see several plausible reasons:

Positioning: It’s one of the few instruct models at that scale, so they may want to focus on making that variant broadly available and reliable before investing in a public reasoning counterpart.

Product strategy: They may already have a reasoning version but prefer to keep it private for their paid API customers rather than releasing it openly.

Demand: “Thinking” models are much less popular than instruct models. Hugging Face’s trending models page shows this clearly. Most users don’t want to wait minutes for each answer.
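To make the waiting-time argument concrete, here is a rough sketch. It assumes generation time scales linearly with the number of output tokens at a fixed decoding speed, which ignores prompt processing and batching effects. Only the 10x ratio comes from the Artificial Analysis comparison mentioned earlier; the token count and the throughput are hypothetical placeholders, not measurements.

```python
def generation_seconds(output_tokens: int, tokens_per_second: float) -> float:
    """Time to decode `output_tokens` at a constant `tokens_per_second`."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return output_tokens / tokens_per_second


INSTRUCT_TOKENS = 500        # hypothetical answer length for an instruct model
REASONING_TOKEN_RATIO = 10   # reasoning models emit ~10x more tokens (from the article)
TOKENS_PER_SECOND = 50.0     # hypothetical decoding speed, same for both models

instruct_wait = generation_seconds(INSTRUCT_TOKENS, TOKENS_PER_SECOND)
reasoning_wait = generation_seconds(
    INSTRUCT_TOKENS * REASONING_TOKEN_RATIO, TOKENS_PER_SECOND
)

print(f"Instruct model:  {instruct_wait:.0f} s per answer")
print(f"Reasoning model: {reasoning_wait:.0f} s per answer")
```

Whatever numbers you plug in, the wait grows by the same factor as the token count, which is why a 10x gap in generated tokens matters so much for interactive use.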

Ministral 3: base, reasoning, and instruct “small” models

Mistral AI also released the Ministral 3 family: multimodal models at 3B, 8B, and 14B.

These are more “regularly sized” models, but the release has a few unusual aspects:

No official multimodal benchmarks: The models are multimodal, but Mistral didn’t publish benchmark numbers for their multimodal capabilities. Evaluation is effectively delegated to the community.

Instruct = FP8 post-trained: The instruct models are available in

This excerpt is provided for preview purposes. Full article content is available on the original publication.