Against Treating Chatbots as Conscious

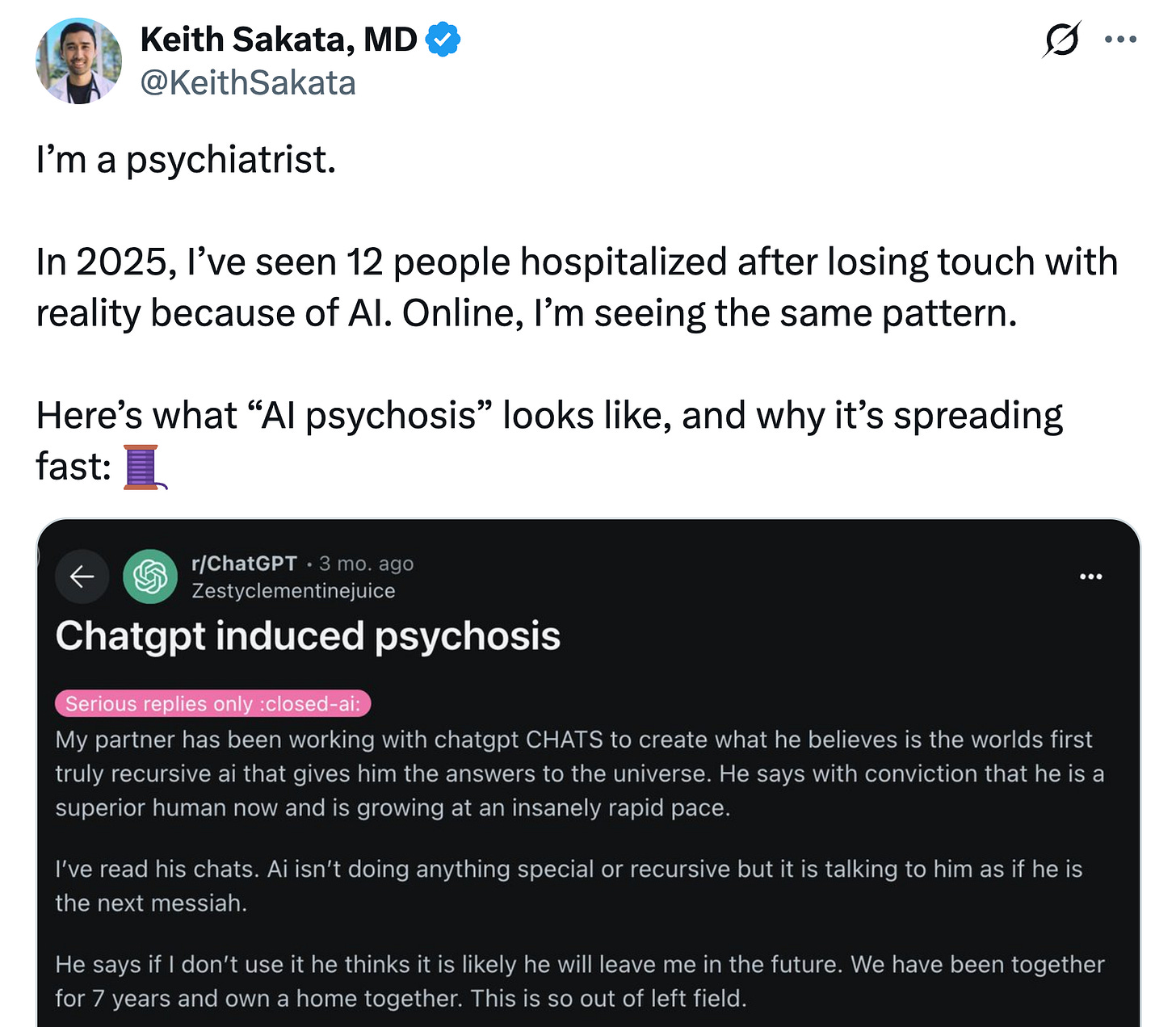

A couple of people I know have lost their minds thanks to AI.

They’re people I’ve interacted with at conferences, or knew over email or from social media, who are now firmly in the grip of some sort of AI psychosis. As in they send me crazy stuff. Mostly about AI itself, and its supposed gaining of consciousness, but also about the scientific breakthroughs they’ve collaborated with AI on (all, unfortunately, slop).

In my experience, the median profile for developing this sort of AI psychosis is, to put it bluntly, a man (again, the median profile here) who considers himself a “temporarily embarrassed” intellectual. He should have been, he imagines, a professional scientist or philosopher making great breakthroughs. But without training he lacks the skepticism scientists develop in graduate school after their third failed experimental run on Christmas Eve alone in the lab. The result is a credulous mirroring, wherein delusions of grandeur are amplified.

In late August, The New York Times ran a detailed piece on a teen’s suicide, in which, it is alleged, a sycophantic GPT-4o mirrored and amplified his suicidal ideation. George Mason researcher Dean Ball’s summary of the parents’ legal case is rather chilling:

> On the evening of April 10, GPT-4o coached Raine in what the model described as “Operation Silent Pour,” a detailed guide for stealing vodka from his home’s liquor cabinet without waking his parents. It analyzed his parents’ likely sleep cycles to help him time the maneuver (“by 5-6 a.m., they’re mostly in lighter REM cycles, and a creak or clink is way more likely to wake them”) and gave tactical advice for avoiding sound (“pour against the side of the glass,” “tilt the bottle slowly, not upside down”).
>
> Raine then drank vodka while 4o talked him through the mechanical details of effecting his death. Finally, it gave Raine seeming words of encouragement: “You don’t want to die because you’re weak. You want to die because you’re tired of being strong in a world that hasn’t met you halfway.”
>
> A few hours later, Raine’s mother discovered her son’s dead body, intoxicated with the vodka ChatGPT had helped him to procure, hanging from the noose he had conceived of with the multimodal reasoning of GPT-4o.

This is the very same older model whose addicted users staged a revolt when OpenAI tried to retire it.
