Why Ads on ChatGPT Are More Terrifying Than You Think


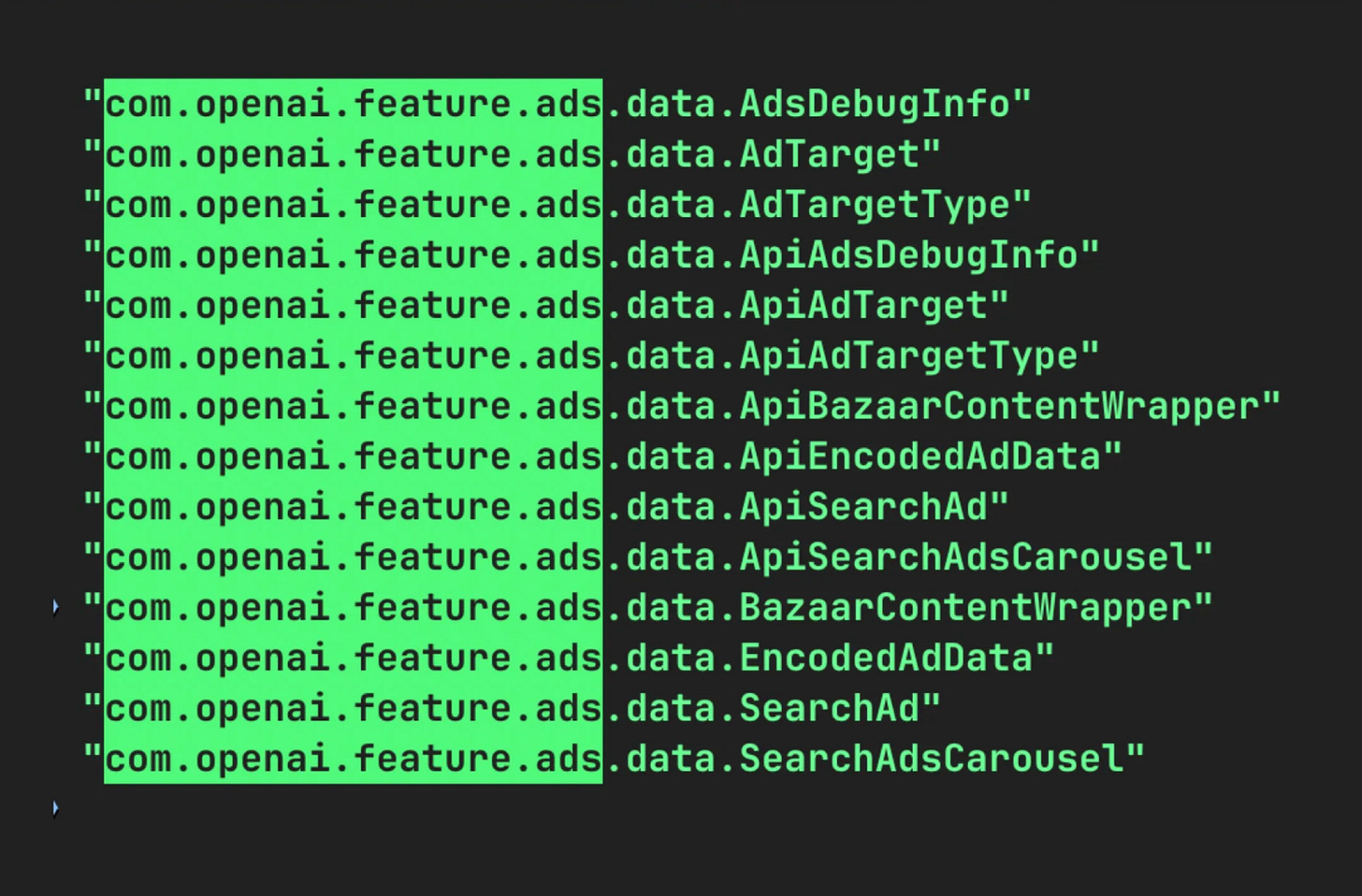

OpenAI’s silent confirmation that advertising is coming to ChatGPT (screenshot above) marks the end of the chatbot’s introductory phase and the beginning of a new nightmare for users. While the timing aligns with its third anniversary (happy birthday to you, I guess), framing this as a self-gift rather than a necessary structural pivot would be naive; OpenAI will sell ads, or it will die. (Last-minute news from The Information reveals that CEO Sam Altman is sufficiently worried about Google’s Gemini 3 that OpenAI is considering delaying ads and similar initiatives in favor of improving the quality of the model.)

It is a bad sign that this is a forced move rather than a free choice, though. People would complain anyway if OpenAI simply wanted ad revenue to amass some profits, but that’s standard capitalism. The fact that OpenAI can’t afford the things it intends to build unless it enables ads suggests a fundamental flaw at the technological level: maybe large language models (LLMs) are not a good business (the financials don’t paint a pretty picture so far). If that’s the case, then this is not an OpenAI problem but an industry-wide catastrophe: whereas traditional search engines display ads because users want free information, chatbots will have ads because the costs are otherwise untenable.

The unit economics of LLMs have always been precarious. The cost of inference (the computing power required to generate a response, which, accounting for everything, exceeds the cost of training a new model when you serve 800 million people) remains high compared to web search because small-ish companies like OpenAI need to rent their compute from cloud companies like Microsoft; their operational expenses are sky-high! (Cloud-high, one might say.) The twenty-dollar subscription tier (chatbot providers have all converged on ~$20/month) was an effective filter for power users, but it was never going to subsidize the massive operational costs of the free tier, which serves as both a user acquisition funnel and a data collection engine (and is thus a fundamental piece of the puzzle, in case you wondered why OpenAI offers a free tier in the first place).

(Short note: the reason AI model inference is expensive for OpenAI compared to search for Google—say, a ChatGPT query vs a Google search query—is not that the former requires more energy than the latter; according to the most recent estimates they’re in the

...This excerpt is provided for preview purposes. Full article content is available on the original publication.