Microsoft's AI Strategy Deconstructed - From Energy to Tokens

Microsoft was at the top of AI in 2023 and 2024, but a year ago they changed course drastically, significantly pausing their datacenter construction and slowing their commitments to OpenAI. We called this out to our Datacenter Model clients at the time and later wrote a newsletter piece about it.

2025 was the story of OpenAI diversifying away from Microsoft, with Oracle, CoreWeave, Nscale, SB Energy, Amazon, and Google all signing large compute contracts with OpenAI directly.

This seems like a dire situation. Today we have a post dissecting Microsoft’s fumble, as well as a public interview with Satya Nadella, conducted together with our dear friend Dwarkesh Patel, in which we challenged Satya on Microsoft’s AI strategy and execution.

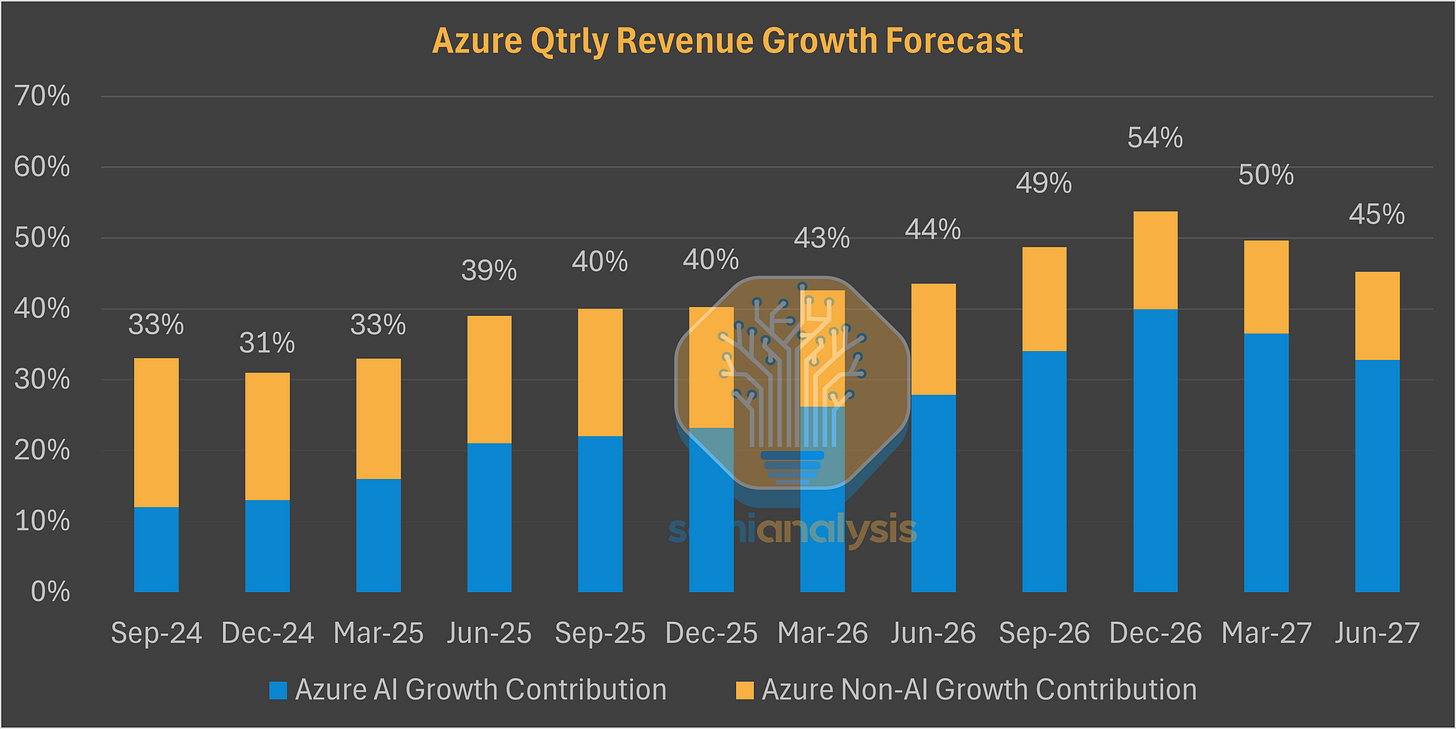

Now Microsoft’s investments in AI are back, and the AI giant has never had such high demand for accelerated computing. The Redmond titan has realized it was going down the wrong path and has dramatically shifted course. With the newly announced OpenAI deal, Azure growth is set to accelerate in the upcoming quarters, as forecast by our Tokenomics model.

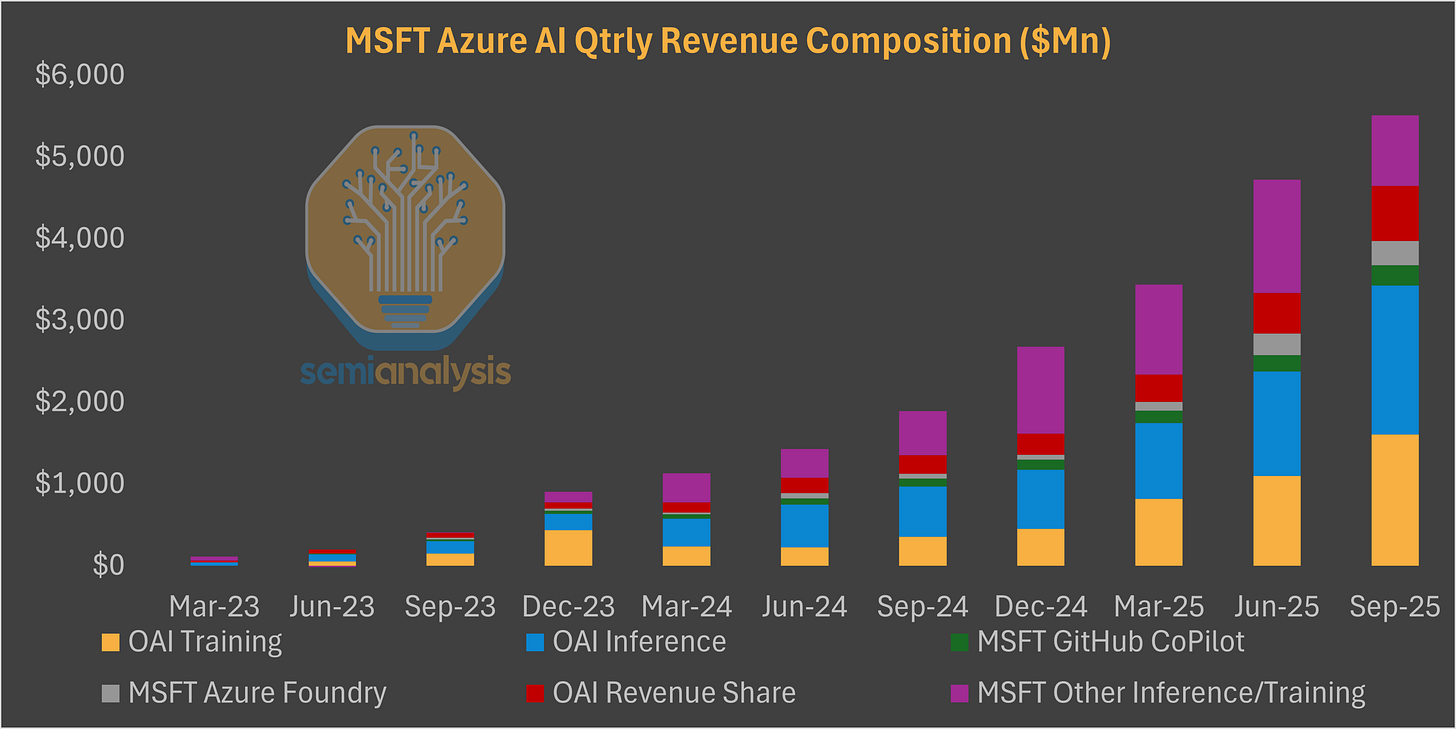

Microsoft plays in every part of the AI Token Economic Stack and is seeing accelerating growth, a trend we expect to continue in the coming quarters and years.

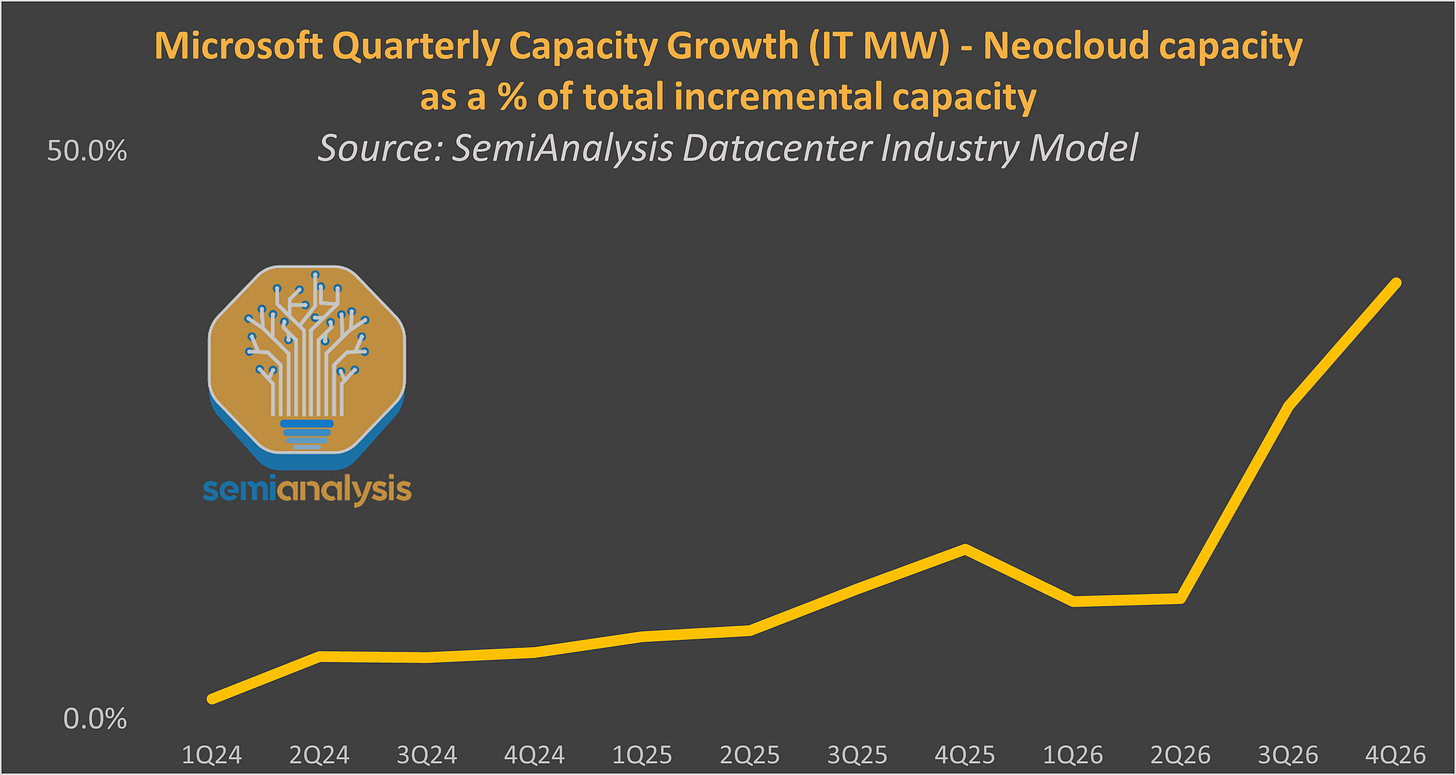

The firm is actively looking for near-term capacity and pulling the trigger on everything it can get its hands on. Self-build, leasing, Neoclouds, middle-of-nowhere locations: everything is on the table to accelerate near-term capacity growth (exact numbers are available to our Datacenter Model subscribers).

On the hardware side, Microsoft even has access to OpenAI’s custom chip IP, the most exciting custom ASICs currently in development. Given that OpenAI’s ASIC program looks to be on a much better trajectory than Microsoft Maia, Microsoft may end up using OpenAI’s chip to serve OpenAI models. This mirrors Microsoft’s situation with OpenAI models: although they have access to them, they are still trying to train their own foundation model through Microsoft AI. We believe Microsoft is attempting to become a truly vertically integrated AI powerhouse, eliminating most of the third-party gross margin stack and delivering more intelligence at lower cost than its peers.

In this report, we will dive into all aspects of Microsoft’s AI business. We begin by reviewing the history of the OpenAI relationship, covering

...This excerpt is provided for preview purposes. Full article content is available on the original publication.