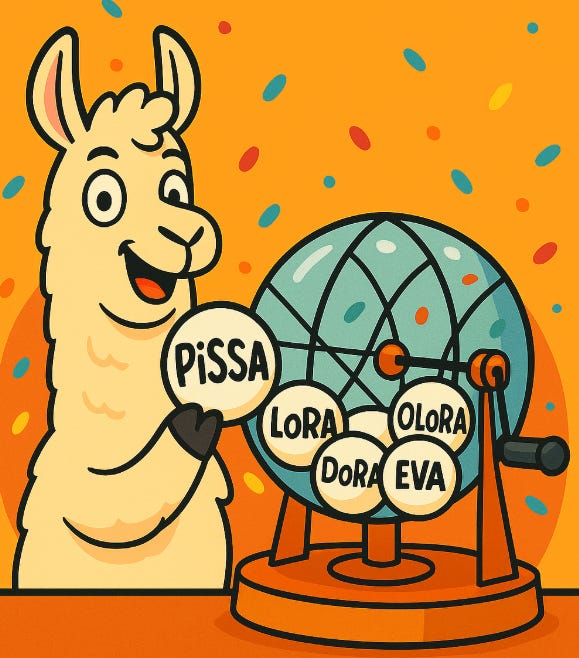

Advanced LoRA Fine-Tuning: How to Pick LoRA, QLoRA, DoRA, PiSSA, OLoRA, EVA, and LoftQ for LLMs

When it’s done well, LoRA can match full fine-tuning while using a fraction of the memory.

LoRA was introduced in 2021, when open LLMs were scarce and relatively small. Today, we have plenty of models, ranging from a few hundred million to hundreds of billions of parameters. On the larger ones, LoRA (or one of its variants) is often the only practical way to fine-tune without spending $10k or more.

Originally, LoRA was meant to train small adapters on top of the attention blocks of LLMs. Since then, the community has proposed many optimizations and extensions, including techniques that work with quantized models.
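To get a feel for how small these adapters are, here is a rough back-of-the-envelope sketch. It assumes a single square weight matrix with a hypothetical hidden size of 4096 and a rank of 16 (both values chosen for illustration, not taken from any specific model). LoRA freezes the original `d_out x d_in` matrix and trains two low-rank factors, `B` (`d_out x r`) and `A` (`r x d_in`), in its place.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Number of weights in one dense projection matrix."""
    return d_out * d_in


def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """Weights in the two LoRA factors: B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


# Illustrative values only: a hypothetical 4096-wide projection and rank 16.
hidden_size = 4096
rank = 16

full = full_params(hidden_size, hidden_size)
lora = lora_trainable_params(hidden_size, hidden_size, rank)
fraction = lora / full

print(f"full matrix: {full:,} weights")
print(f"LoRA factors: {lora:,} trainable weights")
print(f"trainable fraction: {fraction:.2%}")
```

With these numbers, the adapter holds 131,072 trainable weights against 16,777,216 in the frozen matrix, under 1%. Keep in mind that this only counts trainable parameters: the frozen base weights still have to sit in memory, which is where the quantized variants come in.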

In this article, we’ll look at the most useful, modern approaches to LoRA for adapting LLMs to your task and budget. We’ll review (Q)DoRA, (Q)LoRA, PiSSA, EVA, OLoRA, and LoftQ, compare their performance (with and without a quantized base model, when that’s relevant), and discuss when to pick each method. All of them are implemented in Hugging Face PEFT and can be used through TRL.

You can find my notebook showing how to use these techniques here:
